Introduction to AI Agents

What is an AI Agent?

As technology continues to advance at an unprecedented pace, AI is being applied across a wider range of fields than ever before. Among the emerging trends, "AI Agents," intelligent assistants capable of making decisions and executing tasks autonomously, have drawn significant attention. By combining Large Language Models (LLMs), knowledge graphs, and external tools, these agents “think” through the best procedure for a given goal and then take “action” to accomplish it. In this post, we explain what AI Agents are, outline their core mechanisms and potential, and discuss key points for implementation in a clear and accessible way.

How Are They Different from LLMs?

Compared with Large Language Models (LLMs), which have garnered much attention on their own, AI Agents are defined by their ability to create plans (Planning) and carry them out (Actions) to achieve a given objective.

Tasks They Excel At:

- Typical LLMs: Single-turn tasks like text generation, Q&A, translation

- AI Agents: Continuous task management, automation, complex workflow execution

Input:

- Typical LLMs: A single prompt

- AI Agents: Multiple instructions and states

Output:

- Typical LLMs: Text or images

- AI Agents: A chain of actions

How They Operate:

- Typical LLMs: Respond immediately based on the user's prompt

- AI Agents: Decompose tasks and autonomously adjust as they execute

Requirements:

- Typical LLMs: User instructions and appropriate prompts

- AI Agents: Multiple agents, prompts, and task progression management
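To make the contrast above concrete, here is a minimal sketch of the loop that distinguishes an agent from a single prompt-and-response call. The `decide` function is a hypothetical stand-in for an LLM call, and the state layout is our own assumption, not taken from any particular framework.

```python
def decide(state):
    """Hypothetical stand-in for an LLM call that picks the next action."""
    if state["remaining"]:
        return ("do", state["remaining"][0])
    return ("finish", None)


def run_agent(tasks, max_steps=10):
    """Repeat decide -> act -> update state until the agent chooses to finish."""
    state = {"remaining": list(tasks), "log": []}
    for _ in range(max_steps):  # cap the loop so a confused agent cannot spin forever
        action, arg = decide(state)
        if action == "finish":
            break
        state["log"].append(f"did {arg}")  # the "action" is only recorded here
        state["remaining"].pop(0)
    return state["log"]


if __name__ == "__main__":
    print(run_agent(["collect data", "summarize", "send report"]))
```

The output is a chain of actions rather than a single piece of text, and the loop carries state from one step to the next.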

Why Are AI Agents Trending?

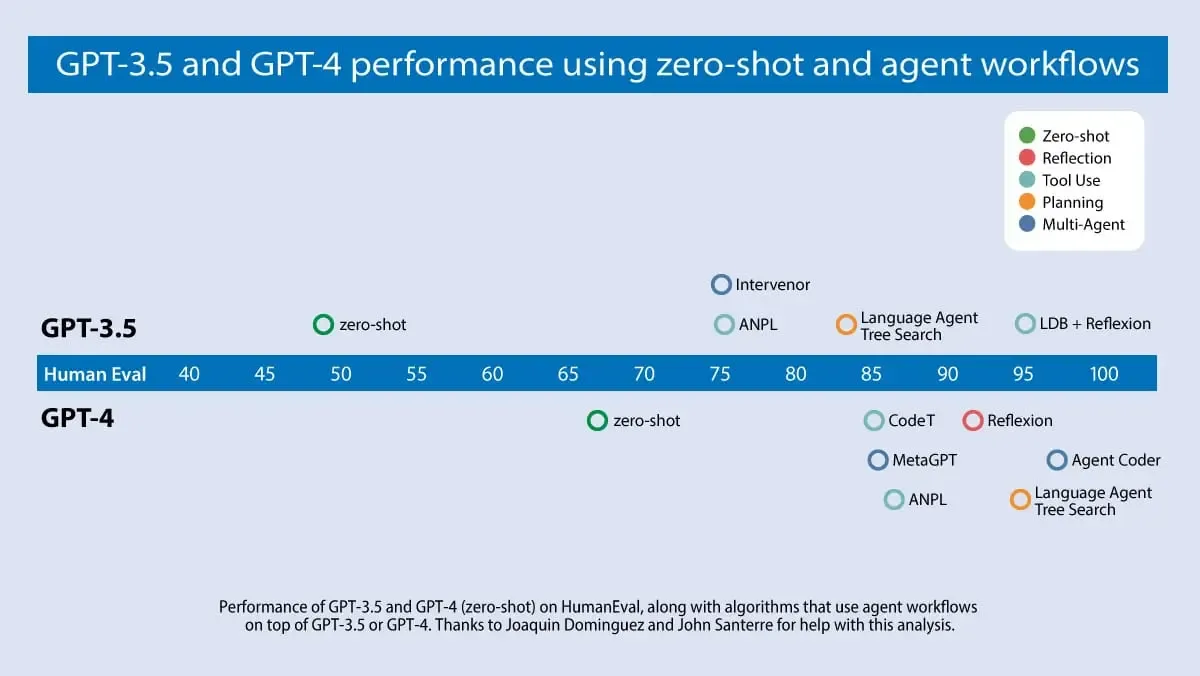

Image source: Andrewng.org


Deep learning expert Andrew Ng points out that incorporating agent workflows may drive greater advancements in LLM performance than the next generation of foundation models.

For instance, on the HumanEval coding benchmark, GPT-3.5 achieves 48.1% accuracy zero-shot (GPT-4 achieves 67.0%), but wrapped in an agent loop, GPT-3.5 reaches up to 95.1%. In other words, agent workflows could dramatically boost LLM performance.

Image source: Agentic Design Patterns


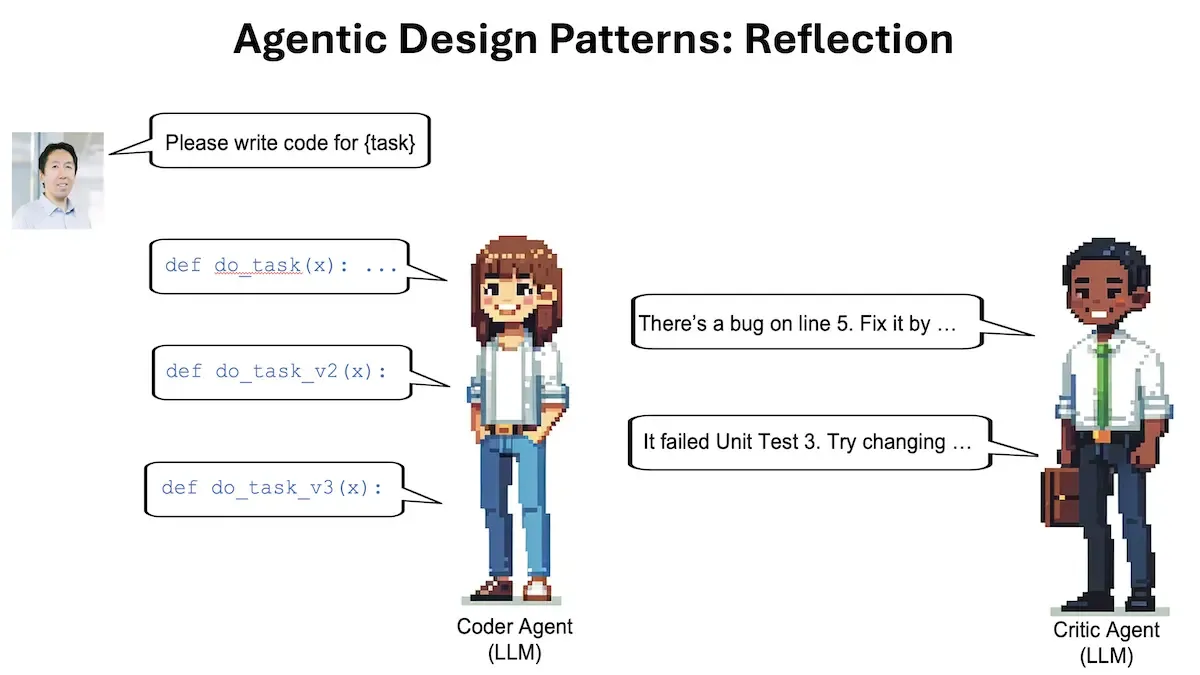

According to Andrew Ng, AI Agents can be built from four key patterns, which he calls "agentic design patterns."

Reflection (Self-Examination):

The LLM reviews its own outputs and identifies improvements. For example, it might re-evaluate generated code, pinpoint errors, and suggest enhancements.
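The reflection pattern can be sketched as a generate, critique, revise loop. In the sketch below, `generate` and `critique` are hypothetical stubs standing in for LLM calls: the first draft deliberately contains a bug, and the critic finds it by running a test case.

```python
def generate(task, feedback=None):
    """Hypothetical stub for an LLM: the first draft is buggy, the revision is fixed."""
    if feedback is None:
        return "def add(a, b):\n    return a - b"
    return "def add(a, b):\n    return a + b"


def critique(code):
    """Stub critic: run the candidate against one known case and describe any failure."""
    namespace = {}
    exec(code, namespace)  # acceptable only because the stub code above is trusted
    if namespace["add"](2, 3) != 5:
        return "add(2, 3) should return 5"
    return None


def reflect_loop(task, max_rounds=3):
    """Regenerate with feedback until the critic has no complaints or rounds run out."""
    feedback = None
    code = ""
    for round_no in range(1, max_rounds + 1):
        code = generate(task, feedback)
        feedback = critique(code)
        if feedback is None:
            return code, round_no
    return code, max_rounds


if __name__ == "__main__":
    final_code, rounds = reflect_loop("write an add function")
    print(f"accepted after {rounds} round(s):\n{final_code}")
```

In a real agent, code like this would run inside a sandbox rather than through a bare `exec`.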

Image source: Agentic Design Patterns


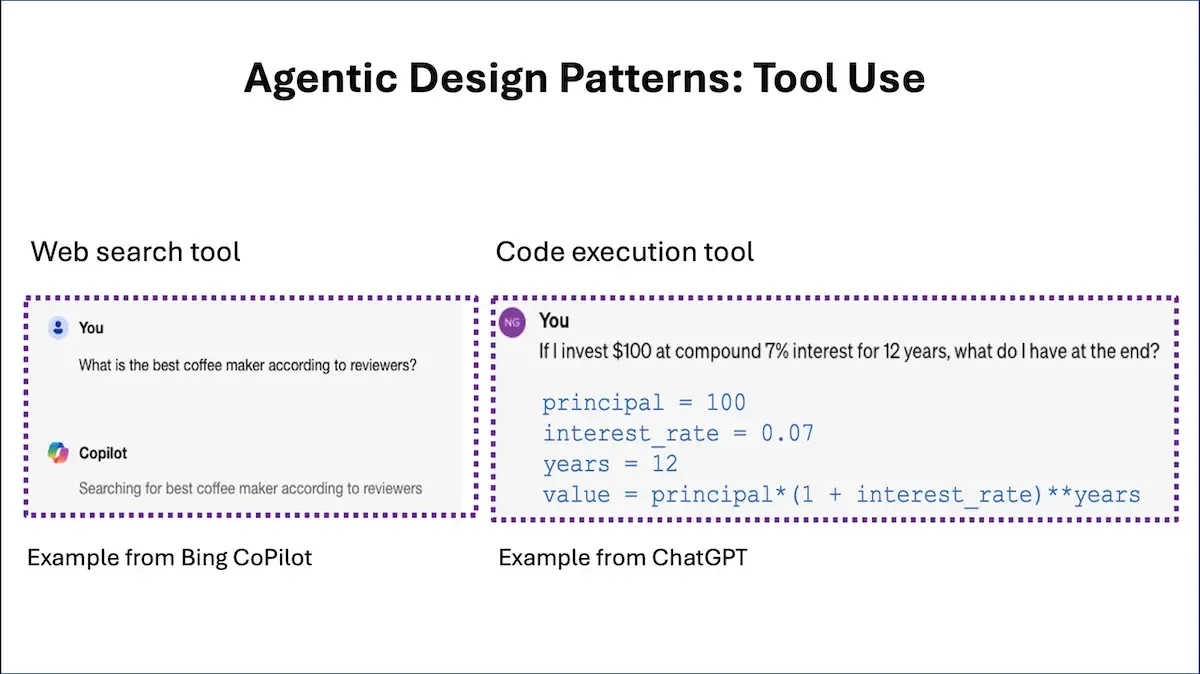

Tool Utilization:

The LLM uses external tools, such as web search or code execution, to gather and process information. This allows agents to obtain the latest data and carry out complex tasks without human intervention.
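One common way to wire this up is a tool registry: the model emits a structured "tool call," and the application looks up the named function and runs it. The sketch below assumes the model's output is a JSON string with `name` and `arguments` keys. That shape and both example tools are our own illustration, not a specific vendor's format.

```python
import json

# Registry of callable tools; both entries are illustrative examples.
TOOLS = {
    "add": lambda a, b: a + b,
    "word_count": lambda text: len(text.split()),
}


def dispatch(tool_call_json):
    """Parse a tool call emitted by the model and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    arguments = call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**arguments)


if __name__ == "__main__":
    print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
    print(dispatch('{"name": "word_count", "arguments": {"text": "agents use tools"}}'))
```

The result of each call would normally be fed back to the model as context for its next step.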

Image source: Agentic Design Patterns


Planning:

The LLM autonomously determines and executes the sequence of steps required to achieve the target goal. By breaking down a complex task into smaller subtasks, it can reach the objective more efficiently. In other words, it makes decisions.
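As a rough sketch of planning, the code below separates a planner, which decomposes the goal into ordered subtasks, from an executor, which runs them in sequence. The `plan` function is a hypothetical stub: a real agent would ask an LLM for the decomposition, whereas here we simply split the goal text on " and ".

```python
def plan(goal):
    """Hypothetical planner stub: split a goal into ordered subtasks."""
    return [part.strip() for part in goal.split(" and ") if part.strip()]


def execute_plan(steps):
    """Run each subtask in order and collect the results."""
    results = []
    for step in steps:
        results.append(f"done: {step}")  # placeholder for real work
    return results


if __name__ == "__main__":
    steps = plan("fetch the sales data and clean the data and plot monthly totals")
    for line in execute_plan(steps):
        print(line)
```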

Image source: Agentic Design Patterns


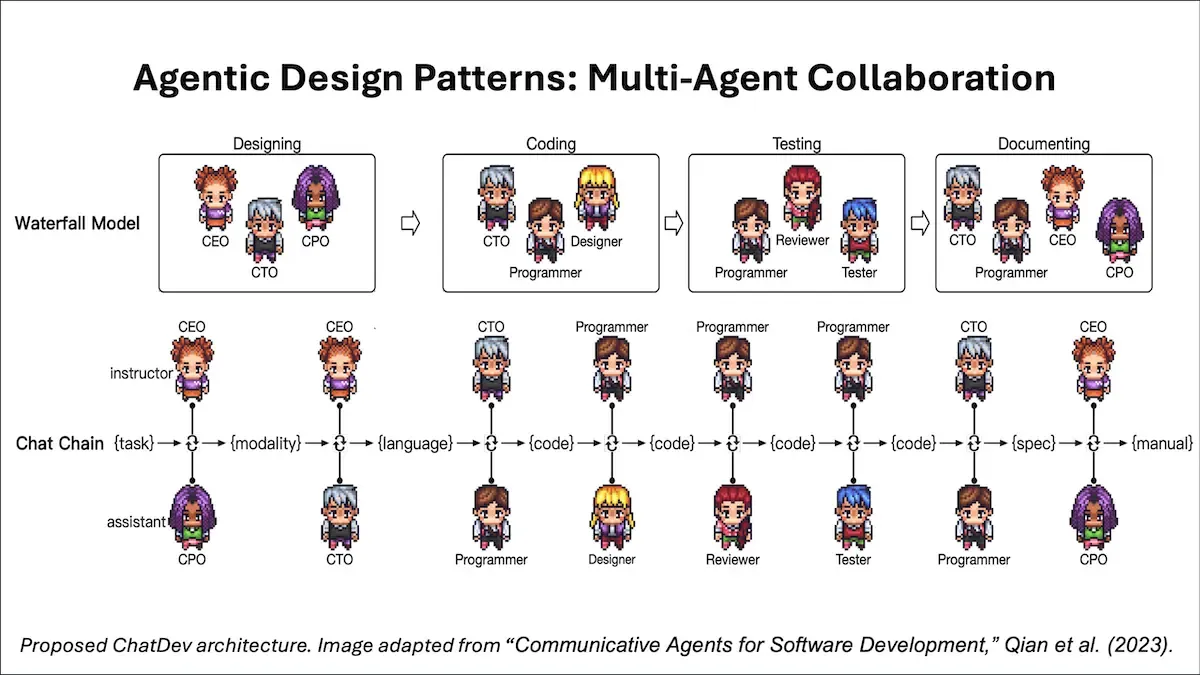

Multi-Agent Collaboration:

Multiple AI agents each take on specialized roles, working together to efficiently solve tasks. Each agent leverages its expertise to deliver the best outcome.
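A minimal way to picture this is a pipeline in which each agent has a role and hands its output to the next one. The `Agent` class and the three roles below are illustrative assumptions, and each `respond` function is a stub standing in for an LLM call with a role-specific prompt.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    name: str
    role: str
    respond: Callable[[str], str]  # stub for an LLM call with a role-specific prompt


def run_pipeline(agents, task):
    """Pass the task through each agent in turn and record who said what."""
    transcript = []
    message = task
    for agent in agents:
        message = agent.respond(message)
        transcript.append((agent.name, message))
    return transcript


researcher = Agent("researcher", "collect facts", lambda m: f"facts about {m}")
writer = Agent("writer", "draft text", lambda m: f"draft using {m}")
reviewer = Agent("reviewer", "check the draft", lambda m: f"approved: {m}")

if __name__ == "__main__":
    for name, message in run_pipeline([researcher, writer, reviewer], "AI agents"):
        print(f"{name}: {message}")
```

Real multi-agent frameworks add routing, shared memory, and stopping conditions on top of this basic hand-off.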

Image source: Agentic Design Patterns


Can AI Agents Do Everything?

AI Agents excel at simple, well-defined tasks, particularly small-scale, repetitive tasks that occur routinely. On the other hand, they are not as suitable for large-scale, infrequent tasks. Since AI Agents cannot handle every kind of task (they are not AGI), it’s important to maintain realistic expectations and understand current limitations.

How to Implement an AI Agent

Implementing AI Agents involves multiple challenges, such as state management, tool execution, data storage, deployment strategies, and framework selection, which makes it more complex than using LLMs or large multimodal models (LMMs) alone. Some representative challenges include:

Complex State Management:

- Maintaining conversation history and long-term memory

- Managing the “Agentic Loop” (repeated LLM calls)
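As an illustration of the state an agent has to carry between LLM calls, the sketch below keeps a sliding window of recent turns plus a small key-value long-term memory, and assembles both into the context for the next call. The class name and message format are assumptions made for this example.

```python
from collections import deque


class ConversationMemory:
    """Keep only the most recent turns, plus durable facts that are never evicted."""

    def __init__(self, max_turns=4):
        self.recent = deque(maxlen=max_turns)  # older turns are dropped automatically
        self.long_term = {}

    def add(self, role, content):
        self.recent.append({"role": role, "content": content})

    def remember(self, key, value):
        self.long_term[key] = value

    def build_context(self):
        """Durable facts first, then the recent conversation window."""
        facts = [
            {"role": "system", "content": f"{key}: {value}"}
            for key, value in sorted(self.long_term.items())
        ]
        return facts + list(self.recent)


if __name__ == "__main__":
    memory = ConversationMemory(max_turns=2)
    memory.remember("user_name", "Aiko")
    for i in range(3):
        memory.add("user", f"message {i}")
    for item in memory.build_context():
        print(item)
```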

Safe Actions:

- Securely executing tool calls

- Using sandboxed environments to control improper arguments or commands
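One small piece of this is checking a model-proposed command before anything runs. The sketch below validates a command against an allowlist and rejects shell metacharacters. It is not a sandbox, only a pre-check that would sit in front of one, and the allowlist contents are an arbitrary example.

```python
import shlex

ALLOWED_COMMANDS = {"ls", "cat", "echo"}  # example allowlist, chosen arbitrarily
FORBIDDEN_CHARS = set(";|&$`><")


def validate_command(command):
    """Return the parsed argument list, or raise if the command looks unsafe."""
    tokens = shlex.split(command)
    if not tokens:
        raise ValueError("empty command")
    if tokens[0] not in ALLOWED_COMMANDS:
        raise PermissionError(f"command not allowed: {tokens[0]}")
    for token in tokens[1:]:
        if FORBIDDEN_CHARS & set(token):
            raise PermissionError(f"suspicious argument: {token}")
    return tokens


if __name__ == "__main__":
    print(validate_command("echo hello"))
    for bad in ["rm -rf /", "echo hello; rm -rf /"]:
        try:
            validate_command(bad)
        except PermissionError as error:
            print(f"rejected: {error}")
```

Returning a token list rather than a string means the caller can run the command without going through a shell.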

Storage Requirements:

- Low-cost storage for conversation history, memory, and external data

- Necessity of vector databases to handle large data efficiently
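To show what a vector database does at its core, here is a toy in-memory store that ranks stored texts by cosine similarity to a query vector. The two-dimensional vectors are made up for illustration. A real system would get its vectors from an embedding model and use an indexed store rather than a linear scan.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Linear-scan store: fine for a demo, far too slow for large data."""

    def __init__(self):
        self.items = []

    def add(self, text, vector):
        self.items.append((text, vector))

    def search(self, query_vector, k=1):
        ranked = sorted(
            self.items,
            key=lambda item: cosine(query_vector, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    store = TinyVectorStore()
    store.add("notes about cats", [1.0, 0.0])
    store.add("notes about dogs", [0.0, 1.0])
    print(store.search([0.9, 0.1]))
```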

Deployment Difficulties:

- More challenging than simple LLM deployment

- Additional requirements such as state management and secure tool environments

Framework Selection:

- Evaluate state/memory management and inter-agent communication features

- Choose frameworks suited to your goals and operating environment

Context Window Structure:

- Each framework provides agent state and instructions to the LLM in different formats

- Selecting a framework with transparent context management can improve controllability and performance

Multi-Agent Communication:

- Platforms like AutoGen offer explicit multi-agent abstractions

- Letta and LangGraph allow direct agent-to-agent calling

- With the right framework, it’s possible to flexibly build from single- to multi-agent setups

Azure AI Agent Service

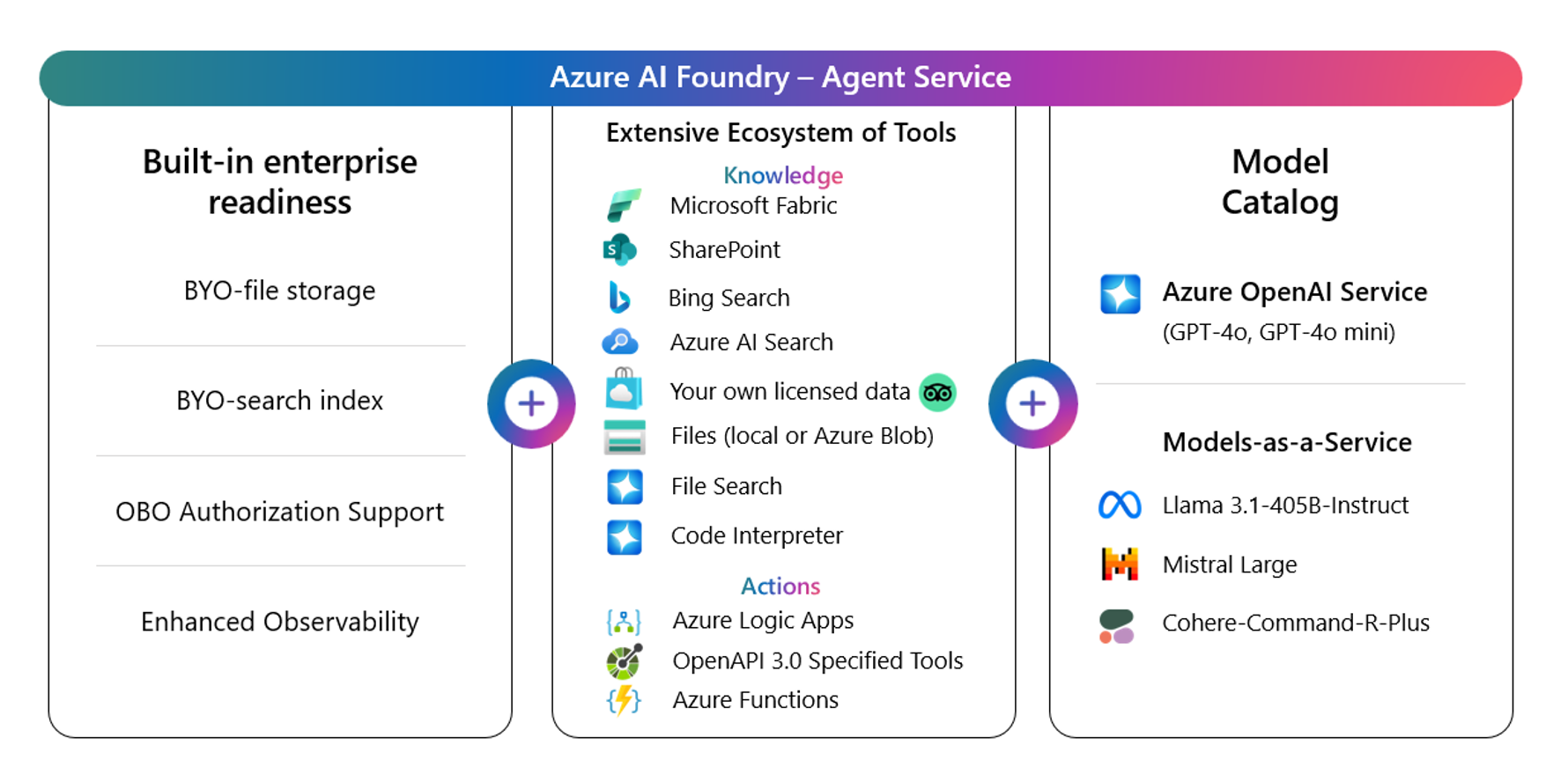

Image source: Azure AI Agent Service


Azure AI Agent Service is an enterprise-grade AI agent development and deployment platform provided by Microsoft. It integrates cutting-edge models, data, tools, and services to support the automation of complex business processes.

Key Features:

- Integration of Diverse Models and Tools: Leverage models and tools from Microsoft, OpenAI, Meta, Mistral, Cohere, and others.

- Knowledge Expansion: Incorporate information from Bing, SharePoint, Microsoft Fabric, Azure AI Search, Azure Blob, and licensed data.

- Action Execution: Perform actions within Microsoft and third-party applications via Azure Logic Apps, Azure Functions, OpenAPI 3.0 tools, and code interpreters.

- Intuitive Agent Building: Use Azure AI Foundry for intuitive agent construction.

- Enterprise-Ready Features: Utilize private storage and VPNs, delegated authentication, and OpenTelemetry-based evaluations for improved observability.

Azure AI Agent Service addresses enterprise challenges like rapid process automation, broad tool/system integration, data privacy assurance, agent cost/performance monitoring, interoperability, and scalability.

Amazon Bedrock Agents

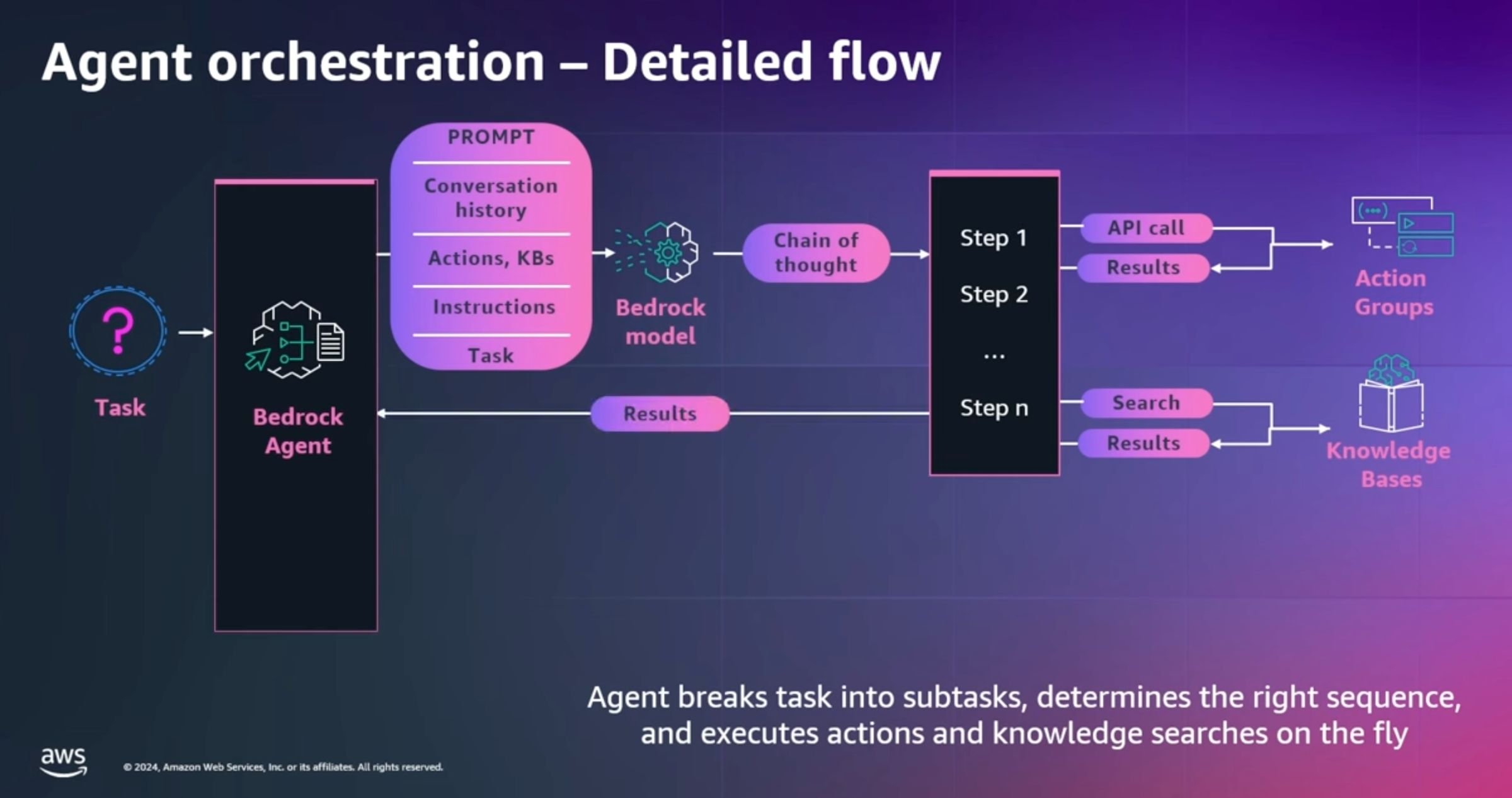

Image source: Amazon Bedrock Agents


Amazon Bedrock Agents, offered by AWS, accelerates the development of generative AI applications. Using this service, you can create agents that break down user requests into multiple steps and automatically perform necessary API calls or data retrieval.

Key Features:

- Multi-Agent Collaboration: Multiple specialized agents work together to handle complex workflows efficiently.

- Code Interpretation: Dynamically generate and run code for tasks like data analysis, visualization, and solving mathematical problems.

- Return of Control: Regain control when agents invoke actions, allowing implementation of backend business logic or asynchronous tasks.

These features enable rapid development of generative AI applications with Amazon Bedrock Agents.

Vertex AI Agent Builder

Image source: Vertex AI Agent Builder


Vertex AI Agent Builder from Google Cloud simplifies the building and deployment of generative AI applications. It caters to a range of developer needs, from no-code agent-building consoles to open-source frameworks (e.g., LangChain on Vertex AI).

Key Features:

- No-Code Agent Creation: Quickly create enterprise-grade generative AI agents using natural language.

- Grounding Generative AI Outputs: Use Vertex AI Search or RAG APIs to leverage enterprise data effectively.

- Vector Search Support: Build embedding-based agents/applications for improved accuracy and utility.

- Enterprise Security and Management: Safely deploy production-ready generative AI apps with robust security features.

Vertex AI Agent Builder allows developers to efficiently create AI agents that optimize complex business processes and improve customer experiences.

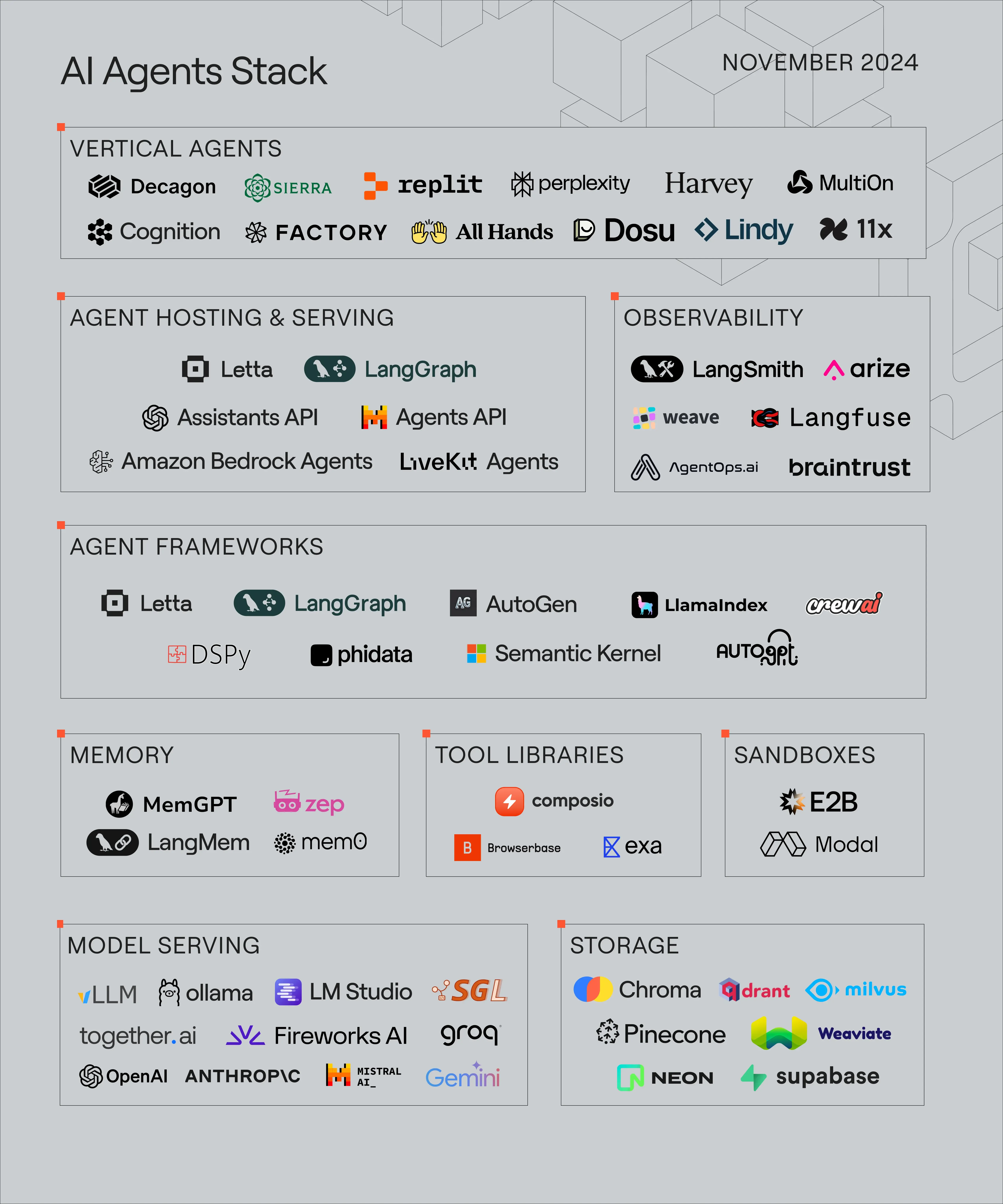

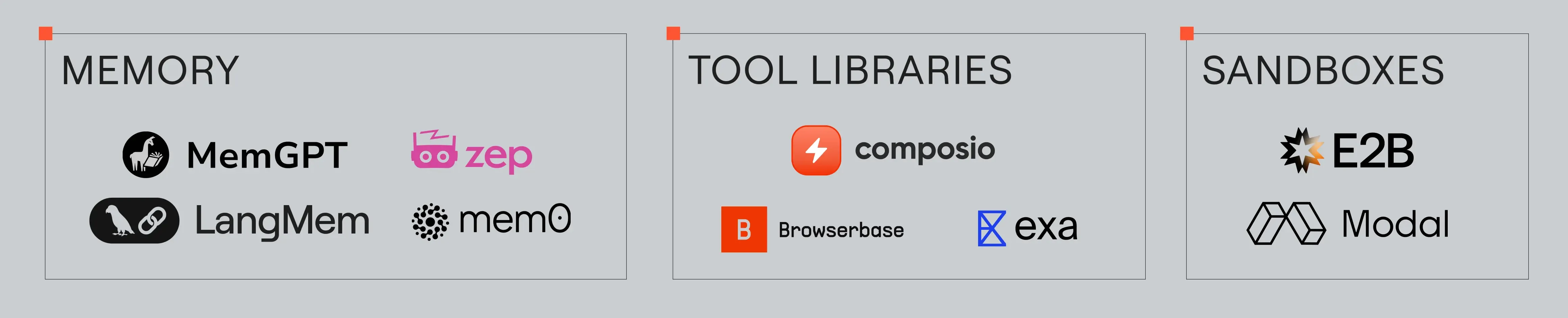

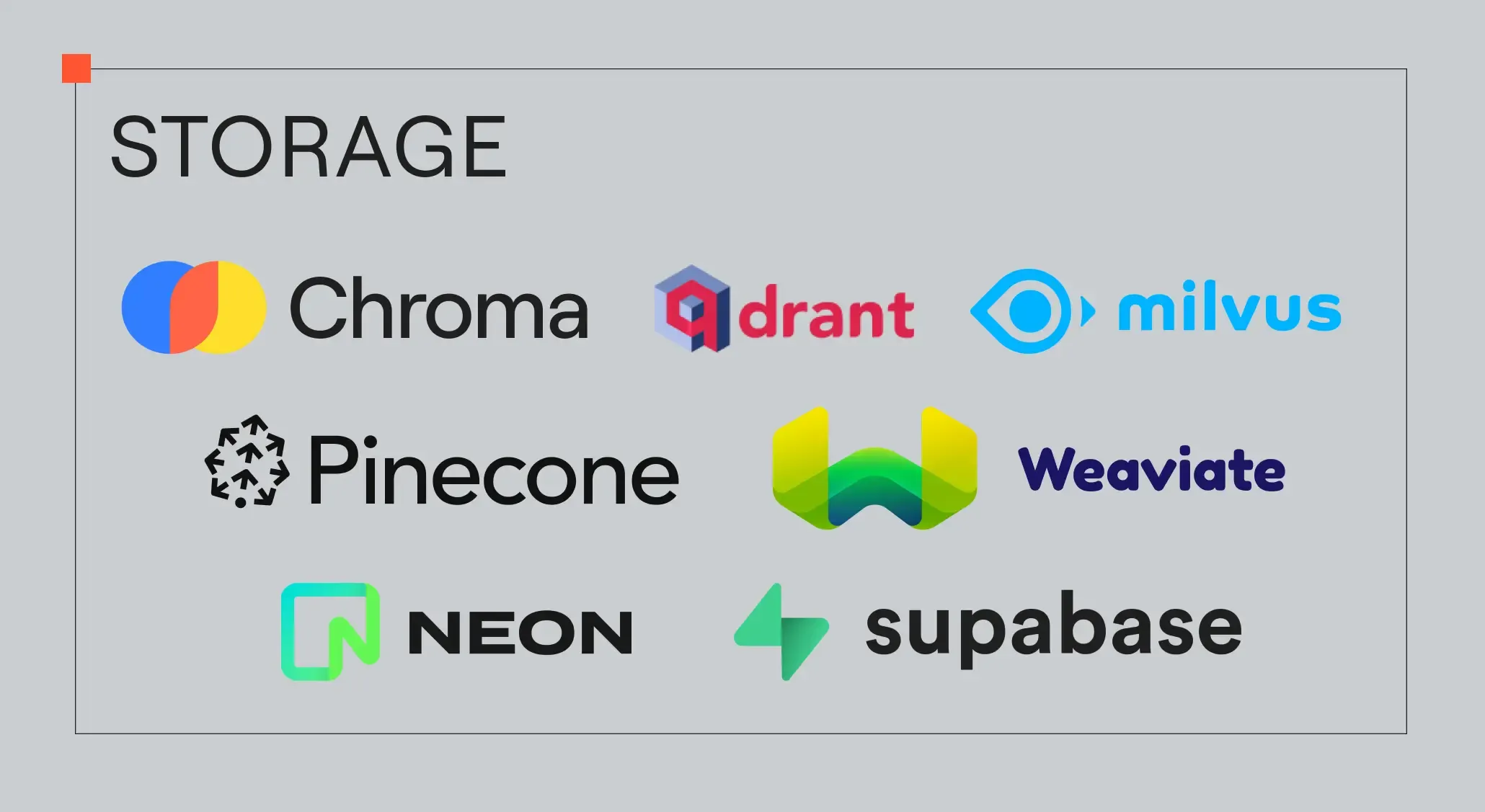

OSS and Other Services

In addition to the three cloud vendor solutions (AWS/Azure/GCP), a wide array of technologies and stacks is available to suit various use cases.

Image source: The AI agents stack


Model Serving:

Efficiently serve the LLM at an agent’s core. Options include OpenAI, Anthropic APIs, or self-hosting (e.g., vLLM, Ollama).

Image source: The AI agents stack

Tools and Libraries:

Standardize the way agents call tools. Use OpenAI’s JSON schema for framework interoperability. Libraries like Composio and Browserbase offer common tools.
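For reference, a tool definition in the JSON-schema style used by OpenAI's function calling looks roughly like the dictionary below. The `get_weather` tool and its `city` parameter are hypothetical, and `missing_arguments` is a small helper we add for illustration. It is not part of any SDK.

```python
import json

# Hypothetical tool described in the OpenAI function-calling schema style.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name, e.g. Tokyo"},
            },
            "required": ["city"],
        },
    },
}


def missing_arguments(tool, arguments):
    """Return required parameter names the model failed to supply."""
    required = tool["function"]["parameters"].get("required", [])
    return [name for name in required if name not in arguments]


if __name__ == "__main__":
    print(json.dumps(weather_tool, indent=2))
    print(missing_arguments(weather_tool, {}))
```

Because the definition is plain JSON, the same description can be handed to different frameworks without rewriting the tool itself.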

Image source: The AI agents stack

Storage Solutions:

Vector databases (Chroma, Qdrant, Pinecone) store external memory for agents.

Image source: The AI agents stack

Agent Development Frameworks:

Frameworks like LangChain, LlamaIndex, Letta each take different approaches to state/memory management and inter-agent communication. Choose one that fits your needs.

Image source: The AI agents stack

Agent Hosting and Serving:

Deploy agents as services via REST APIs. Address state management, secure tool execution, and other requirements with specialized hosting solutions.

Image source: The AI agents stack

Challenges and Solutions in AI Agent Implementation

While we introduced various tools and services for AI Agent development, additional concepts and techniques can help overcome core challenges. In particular, enabling agents to make decisions (Planning) and take actions (Action) are key hurdles.

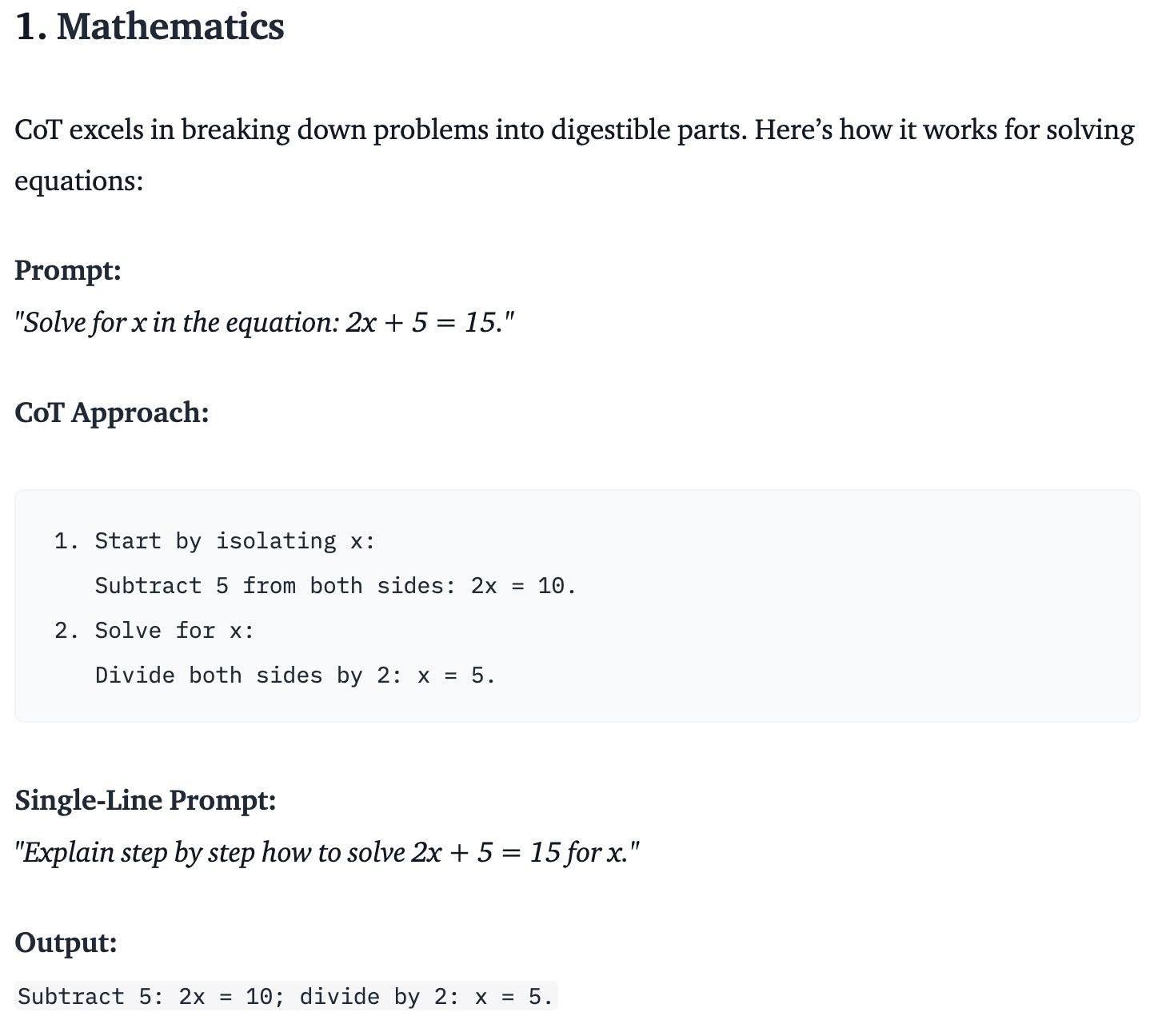

Chain-of-Thought (CoT)

Chain-of-Thought (CoT) is a prompt engineering technique that guides AI agents to break down complex tasks into step-by-step reasoning. This approach tends to yield more accurate and consistent results on multi-step problems.

Image source: Mastering Chain of Thought (CoT) Prompting for Practical AI Tasks


- Without CoT, models might answer directly, without showing any intermediate reasoning.

- With CoT, you encourage the model to “think” step-by-step, useful for tasks like math problems or multi-step reasoning.
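The difference between the two styles comes down to how the prompt is written. The sketch below builds a direct prompt and a CoT prompt for the same question, and pulls the final answer out of a step-by-step completion. The prompt wording and the `Answer:` convention are our own choices, and the sample completion is hand-written rather than real model output.

```python
def direct_prompt(question):
    """Ask for the answer only."""
    return f"Question: {question}\nAnswer:"


def cot_prompt(question):
    """Ask the model to reason step by step before answering."""
    return (
        f"Question: {question}\n"
        "Let's think step by step, then give the final answer "
        "on a line starting with 'Answer:'."
    )


def extract_answer(completion):
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(completion.splitlines()):
        if line.startswith("Answer:"):
            return line[len("Answer:"):].strip()
    return None


if __name__ == "__main__":
    print(cot_prompt("A box holds 3 rows of 4 apples, plus 2 loose apples. How many apples?"))
    sample = "Step 1: 3 * 4 = 12\nStep 2: 12 + 2 = 14\nAnswer: 14"
    print(extract_answer(sample))
```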

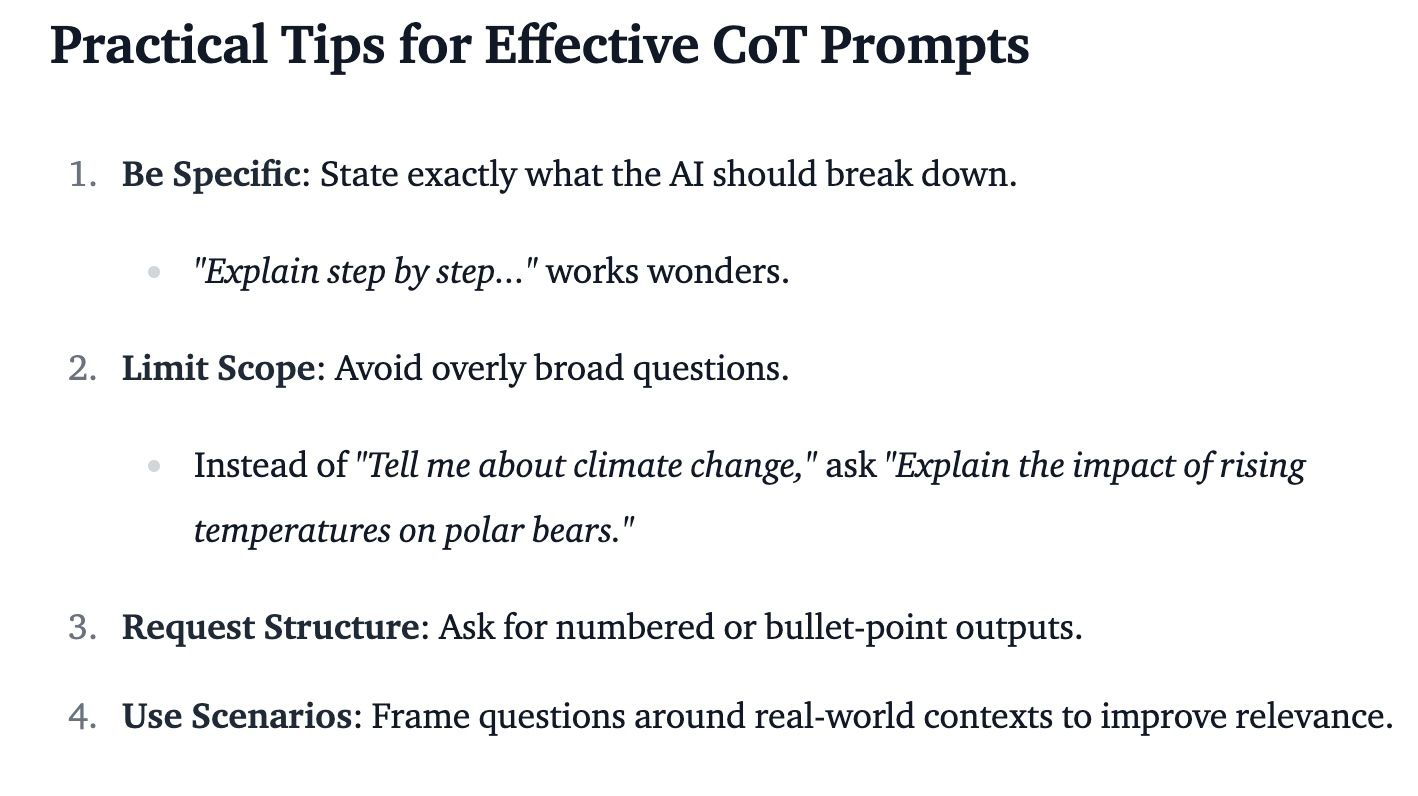

Best Practices for CoT Prompting:

- Be Specific: Clearly tell the AI what to break down. “Explain step-by-step” works well.

- Limit Scope: Avoid overly broad questions. Instead of “Tell me about climate change,” ask “Explain the impact of rising temperatures on polar bears.”

- Request Structure: Ask for bullet lists or numbered outputs for clarity.

- Use Scenarios: Provide real-world contexts to improve relevance.

Image source: Mastering Chain of Thought (CoT) Prompting for Practical AI Tasks

Large Action Models (LAM)

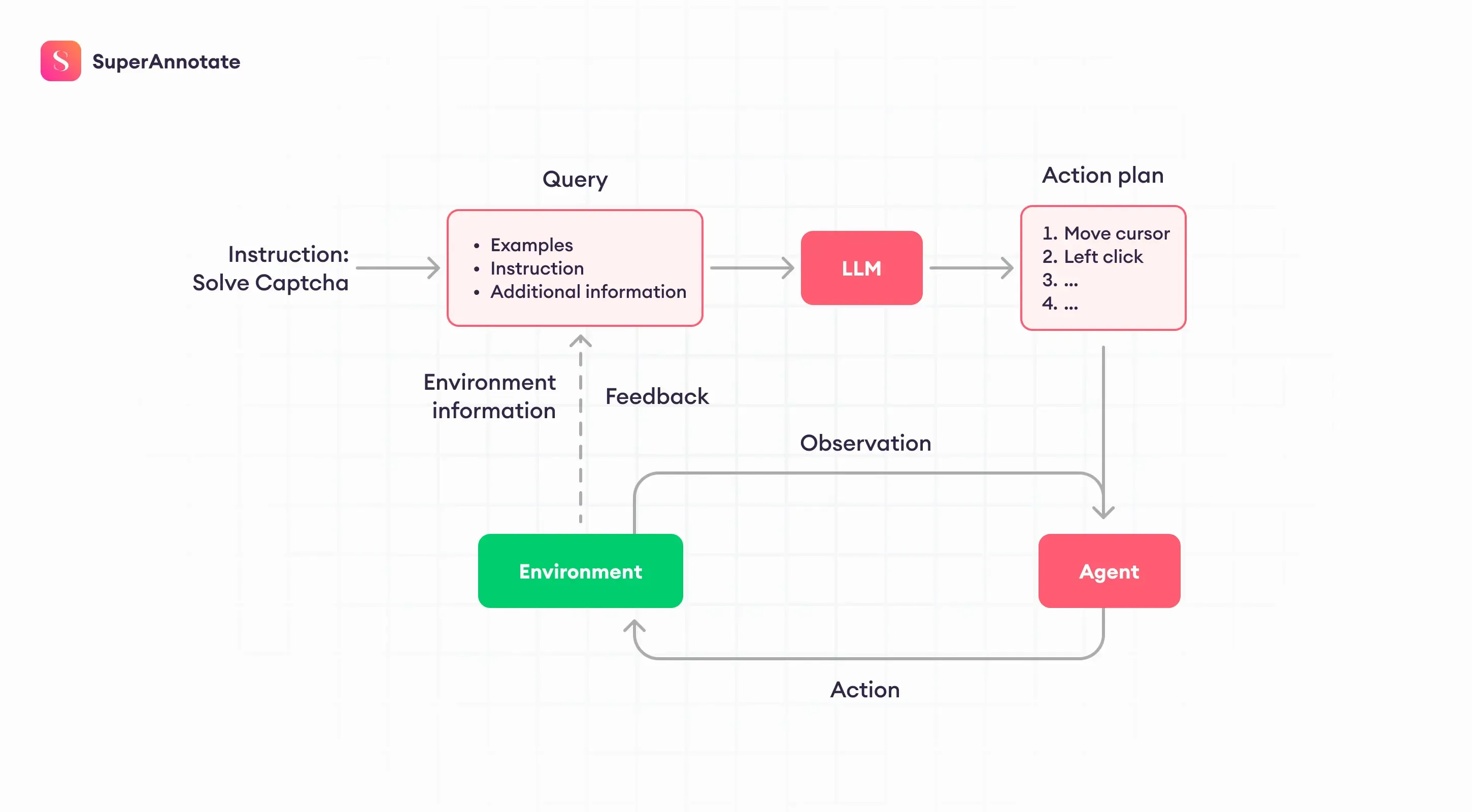

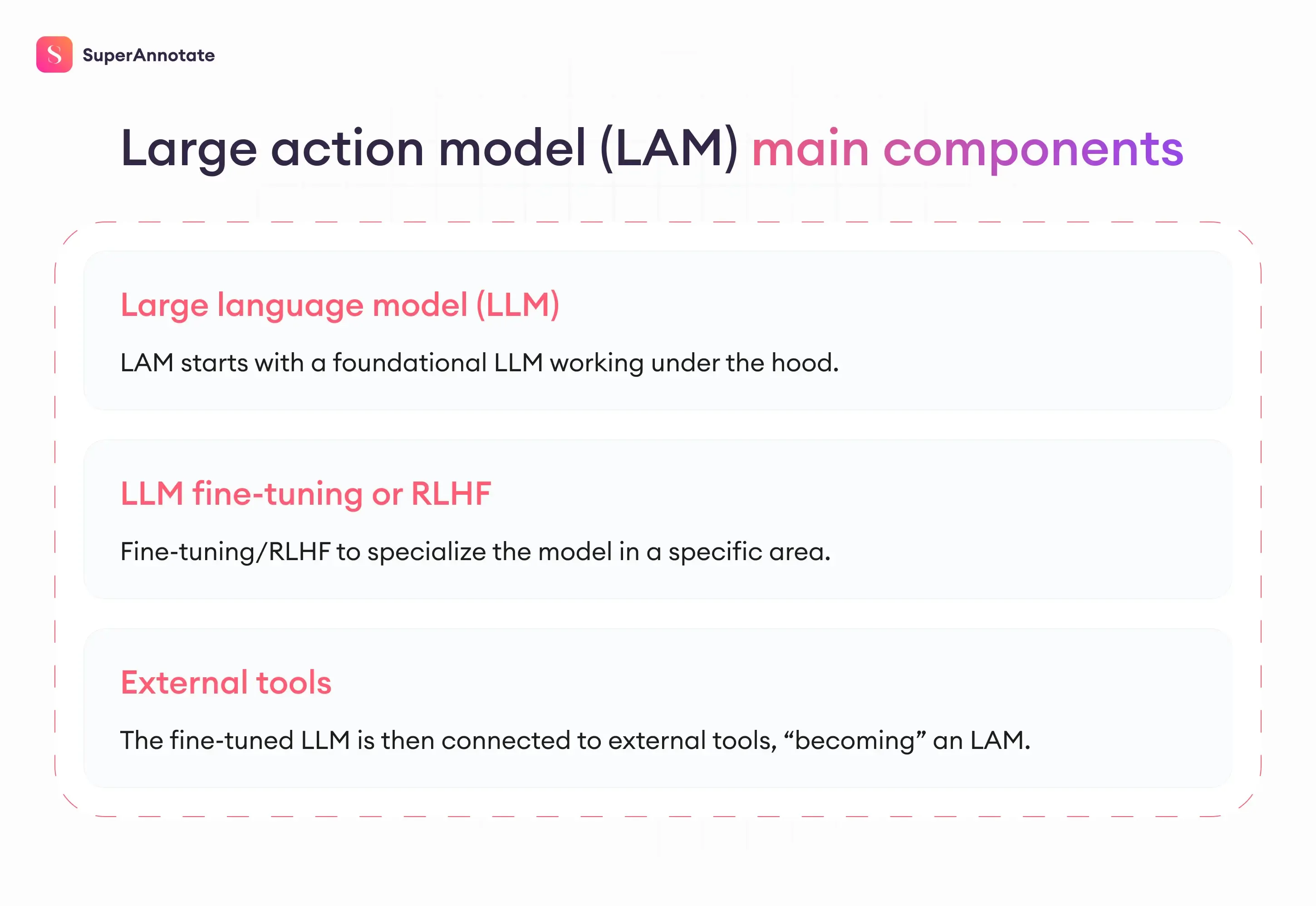

Large Action Models (LAMs) integrate decision-making abilities into LLMs, allowing them to understand human intentions and autonomously execute complex tasks.

Image source: Large action models (LAMs): The foundation of AI agents


Creating LAMs from LLMs:

- Fine-Tuning and Alignment: Specialize LLMs for specific domains via fine-tuning and alignment methods (RLHF, RLAIF, DPO). Optionally incorporate multimodal data (text, images, audio).

  - RLHF: Reinforcement Learning from Human Feedback

  - RLAIF: Reinforcement Learning from AI Feedback

  - DPO: Direct Preference Optimization

- Tool Integration: Connect the fine-tuned LLM to external tools to evolve it into a LAM capable of autonomous action.
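Of the alignment methods listed above, DPO is the easiest to write down. As a small sketch, the function below computes the per-example DPO loss from the DPO paper, given sequence log-probabilities of a preferred ("chosen") and a dispreferred ("rejected") response under the policy being trained and under a frozen reference model. The numbers in the usage example are made up.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Per-example DPO loss: -log(sigmoid(beta * margin)).

    Each argument is a log-probability of a full response. The margin measures
    how much more the policy prefers the chosen response than the reference does.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    # -log(sigmoid(x)) equals log(1 + exp(-x))
    return math.log1p(math.exp(-beta * margin))


if __name__ == "__main__":
    # Policy identical to the reference: margin is 0, so the loss is log(2).
    print(dpo_loss(-2.0, -2.0, -2.0, -2.0))
    # Policy has moved toward the chosen response: the loss is smaller.
    print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

In practice this loss is averaged over a batch of preference pairs and minimized with gradient descent.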

Image source: Large action models (LAMs): The foundation of AI agents

“The main talk about LAMs started with Rabbit AI’s release of R1, but there are a few other players in the game. In particular, the recent release of Anthropic’s Claude features shook the AI community with what’s possible in agentic AI.”

Source: Large action models (LAMs): The foundation of AI agents

Tools for Enabling Actions

A major challenge is enabling AI agents to perform actions (e.g., calling APIs, running scripts). Cloud vendors allow this within their ecosystems, while external environments may use offerings from OpenAI, Anthropic, and others.

OpenAI macOS Library:

Enables action outside cloud ecosystems.

Anthropic’s Model Context Protocol (MCP):

A unified protocol that replaces complex, one-off integrations. It allows AI systems to fetch real-time information from various data sources, and it is open-sourced to foster ecosystem growth. See the MCP GitHub repository for source code, supported services, and tools.
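To give a feel for the protocol, MCP messages follow JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The sketch below only builds such a request as a JSON string. The `search_docs` tool and its arguments are hypothetical, and transport and session setup are left out entirely.

```python
import json


def make_tool_call_request(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to invoke a tool."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": request_id,
            "method": "tools/call",
            "params": {"name": tool_name, "arguments": arguments},
        }
    )


if __name__ == "__main__":
    # "search_docs" is a made-up tool name used only for illustration.
    print(make_tool_call_request(1, "search_docs", {"query": "quarterly report"}))
```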

Conclusion

AI Agents represent the evolution of AI from information tools to intelligent partners capable of autonomous decision-making and action. They can handle complex decision-making, task decomposition, and external tool utilization, proving valuable in both business and everyday applications.

However, challenges remain in real-world deployment, including reliability, safety, error control, and explainability. As related technologies and frameworks advance, AI Agents are expected to become more accessible, reliable, and instrumental in creating new sources of value.

References

- Agentic Design Patterns Part 1

- What is Agentic RAG? Building Agents with Qdrant

- The AI agents stack

- AIs Autonomously Carrying Out Tasks Together! What Is an AI Multi-Agent? (original title: AI同士が自律的にタスクを遂行!AIマルチエージェントとは)

- Microsoft’s GraphRAG + AutoGen + Ollama + Chainlit = Local & Free Multi-Agent RAG Superbot

- The Leading Multi-Agent Platform

- How Toyota uses Azure Cosmos DB to power their multi-agent AI system for enhanced productivity

- Balance agent control with agency

- Microsoft Tech Community

- Introducing the Model Context Protocol

- Large Action Models (LAMs): The Next Frontier in AI-Powered Interaction

- Large action models (LAMs): The foundation of AI agents

- AI and the Evolving Consumer Device Ecosystem

- GitHub: Virtual Lab

- “The Virtual Lab: AI Agents Design New SARS-CoV-2 Nanobodies with Experimental Validation,” Kyle Swanson et al. (2024)

- “Self-Refine: Iterative Refinement with Self-Feedback,” Madaan et al. (2023)

- “Reflexion: Language Agents with Verbal Reinforcement Learning,” Shinn et al. (2023)

- “CRITIC: Large Language Models Can Self-Correct with Tool-Interactive Critiquing,” Gou et al. (2024)

- “Gorilla: Large Language Model Connected with Massive APIs,” Patil et al. (2023)

- “MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action,” Yang et al. (2023)

- “Efficient Tool Use with Chain-of-Abstraction Reasoning,” Gao et al. (2024)

- “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models,” Wei et al. (2022)

- “HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face,” Shen et al. (2023)

- “Understanding the planning of LLM agents: A survey,” Huang et al. (2024)

- “Communicative Agents for Software Development,” Qian et al. (2023) (the ChatDev paper)

- “AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation,” Wu et al. (2023)

- “MetaGPT: Meta Programming for a Multi-Agent Collaborative Framework,” Hong et al. (2023)

- “Agent AI: Surveying the Horizons of Multimodal Interaction,” Zane Durante et al. (arXiv). This paper explores the integration of AI agents within physical and virtual environments, emphasizing their ability to process multimodal data and perform context-aware actions.

- “An In-depth Survey of Large Language Model-based Artificial Intelligence Agents,” Pengyu Zhao, Zijian Jin, and Ning Cheng (arXiv). The authors analyze the evolution of AI agents powered by large language models, discussing their capabilities, challenges, and future directions.

- “Levels of AI Agents: from Rules to Large Language Models,” Yu Huang (arXiv). This work categorizes AI agents based on their complexity and functionality, ranging from rule-based systems to those utilizing large language models, providing a framework for understanding their development.

- “Visibility into AI Agents,” Chan et al. (arXiv). The paper addresses the importance of transparency and accountability in AI agents, proposing methods to enhance their interpretability and trustworthiness.