Tips for Writing Effective Prompts

Introduction

As large language model (LLM) technology advances rapidly, the importance of prompt engineering is becoming increasingly evident. A prompt is the input instruction you give an AI model, and it largely shapes the response the model generates. LLMs are powerful and can handle a wide range of tasks, but without well-crafted prompts it is hard to harness their full potential. In this post, we’ve compiled some tips on how to write effective prompts.

In the course of our research and development with LLMs, we came across the official documentation of Anthropic, the AI startup known for developing the Claude family of LLMs. The documentation is very insightful: its prompt engineering tips apply not only to Claude but to LLMs in general. We’ve used it as the main reference for this post.

What Happens When a Prompt is Poorly Constructed?

What issues arise from poorly written prompts, and why do they occur? Let’s take a brief look. Poor-quality prompts can lead to the following problems:

- Ambiguity: Ambiguous prompts make it difficult for AI to respond as intended.

- Bias: Inappropriate prompts may unintentionally introduce bias into the results.

- Inaccurate Responses: Prompts based on incorrect information or inappropriate context can generate responses that are factually incorrect.

- Suppression of Creativity: Overly detailed prompts can restrict the AI's ability to provide creative responses.

- Overgeneralization: Prompts that lack sufficient detail may result in generic, less specific responses.

These issues arise because LLMs generate text by repeatedly predicting the next token (roughly, a word or part of a word) based on the text that came before. In other words, they produce the continuation that best fits the patterns in the data (large amounts of text, etc.) they were trained on, and they have no access to your intent beyond what the prompt actually says. Therefore, providing clear and detailed input is essential to reducing the likelihood of errors.

What Makes a Good Prompt?

A good prompt is one designed to lead the AI reliably to the expected response. Good prompts share the following characteristics:

- Clarity: The prompt should be clear and unambiguous.

- Specificity: The prompt should include the necessary detailed information.

- Relevance: Everything in the prompt should bear directly on the request; leave out information that does not.

- Context Provision: The prompt should provide the necessary background information and conditions.

What Are the Specific Requirements for a Good Prompt?

When writing a prompt, keep the following elements in mind. You don’t need to include all of them in every prompt; choose and adapt the ones that fit the task you want the LLM to perform.

- Task Context: Give the LLM a role or persona so it understands the task at hand; this helps keep it from going off track.

- Tone Specification: Specify the tone of the conversation. This allows you to control the choice of words to some extent.

- Background Data (Documents and Images): Often simply called context, this is all the information the LLM needs to complete the task. Providing it keeps the response specific to your situation and improves its quality.

- Detailed Task Description and Rules: Add detailed rules or instructions for how the LLM should interact with the user. This helps reduce errors such as hallucinations.

- Examples: Provide examples of the desired output to guide the LLM.

- Conversation History: If the user and the LLM have interacted before, provide that history so the LLM can continue the conversation seamlessly. Chat services such as ChatGPT do this automatically within a conversation.

- Immediate Task Description or Request: State clearly what you want the LLM to do right now, including any sub-tasks that follow from the assigned role or task.

- Think Step-by-Step: When needed, ask the LLM to take its time or think step-by-step. For complex tasks, having the LLM work through the problem carefully and methodically, rather than all at once, often produces better results.

- Output Formatting: If the output format matters, for example when you expect JSON or Markdown, give detailed instructions about that format.

- Prefill: If the LLM tends to stray from what you want, especially in format, write the first part of its response yourself and have it continue from there.
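To make the list above more concrete, here is a minimal Python sketch of how several of these elements might be assembled into one prompt. `build_prompt` and `build_messages` are hypothetical helpers of our own; the section order and wording are just one reasonable choice, not a format any LLM requires, and the `{"role": ..., "content": ...}` message shape is an assumption modeled on common chat APIs.

```python
from typing import Optional


def build_prompt(
    role: str,
    task: str,
    tone: Optional[str] = None,
    background: Optional[str] = None,
    rules: Optional[list[str]] = None,
    examples: Optional[list[str]] = None,
    output_format: Optional[str] = None,
    step_by_step: bool = False,
) -> str:
    """Assemble a prompt from optional elements (hypothetical helper)."""
    parts = [f"You are {role}."]  # task context: role or persona
    if tone:
        parts.append(f"Use a {tone} tone.")  # tone specification
    if background:
        parts.append(f"Background information:\n{background}")  # context data
    if rules:
        parts.append("Rules:\n" + "\n".join(f"- {r}" for r in rules))
    if examples:
        parts.append("Examples of the desired output:\n" + "\n\n".join(examples))
    parts.append(f"Task: {task}")  # immediate task description
    if step_by_step:
        parts.append("Think step-by-step before giving your final answer.")
    if output_format:
        parts.append(f"Output format: {output_format}")
    # Elements that were not supplied are simply left out.
    return "\n\n".join(parts)


def build_messages(prompt: str, history: Optional[list[dict]] = None) -> list[dict]:
    """Append the new prompt to earlier turns (assumed chat message shape)."""
    messages = list(history or [])  # copy so the caller's history is not mutated
    messages.append({"role": "user", "content": prompt})
    return messages


if __name__ == "__main__":
    prompt = build_prompt(
        role="a support assistant for an online bookstore",
        task="Explain how the customer can return a damaged book.",
        tone="friendly but concise",
        rules=["If you are not sure of a policy detail, say so instead of guessing."],
        output_format="Plain text, at most three sentences.",
    )
    print(prompt)
```

Optional elements are skipped when not supplied, which mirrors the point that not every prompt needs every element.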

Examples of Good Prompts

Here are some techniques you can use when writing prompts.

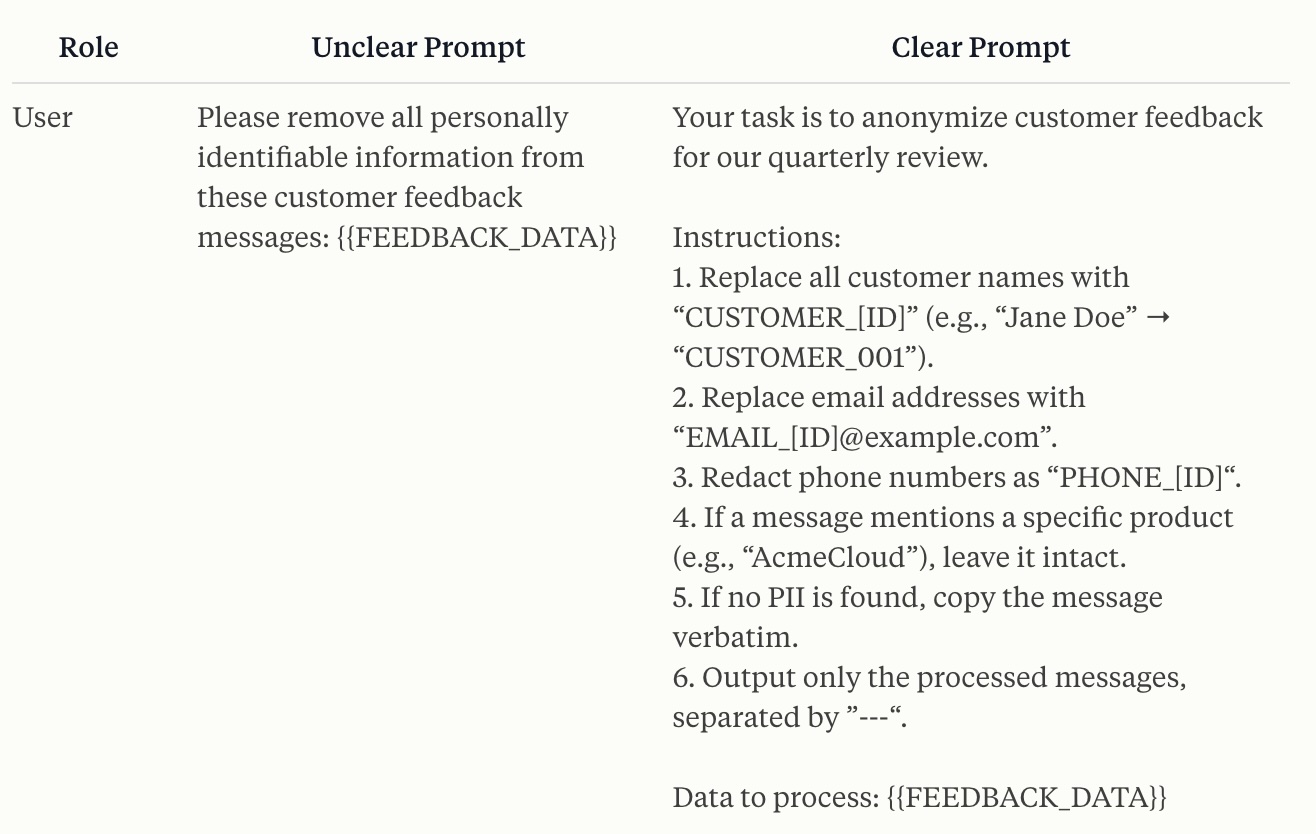

For instance, when providing task context, it’s important to clearly specify the following:

- What will the task results be used for?

- Who is the intended audience for the output?

- What workflow is the task part of, and where does it fit in that workflow?

- What is the end goal of the task, and what does successful completion look like?

Image source: Anthropic

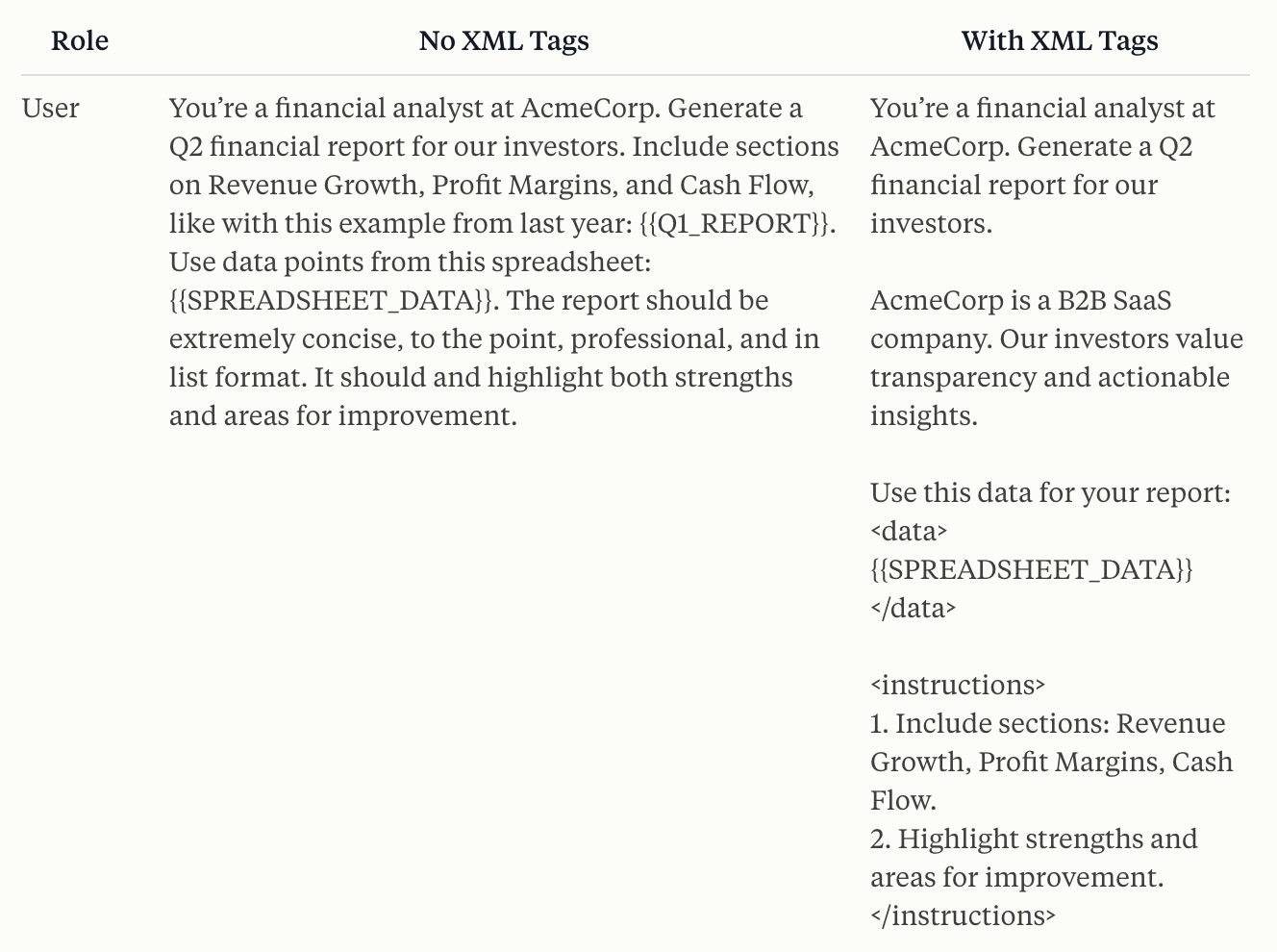

Image source: Anthropic

Using XML tags is also an effective technique with the following advantages:

- Clarity: Clearly separate different parts of your prompt and ensure your prompt is well-structured.

- Accuracy: Reduce errors caused by the LLM misinterpreting parts of your prompt.

- Parsability: Having the LLM use XML tags in its output makes it easier to extract specific parts of its response by post-processing.
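As a rough illustration of the clarity and parsability points, the Python sketch below separates a prompt's instructions from its input document with XML tags, then pulls a tagged section out of a response. The tag names (`instructions`, `document`, `summary`) are our own illustrative choice, and the sample response is made up rather than real model output.

```python
import re


def tagged_prompt(instructions: str, document: str) -> str:
    """Keep instructions and input data in separate, clearly labeled sections."""
    return (
        f"<instructions>\n{instructions}\n</instructions>\n\n"
        f"<document>\n{document}\n</document>\n\n"
        "Write your summary inside <summary></summary> tags."
    )


def extract_tag(response: str, tag: str) -> list[str]:
    """Return the stripped contents of every <tag>...</tag> pair in a response."""
    name = re.escape(tag)
    # Non-greedy match with DOTALL so multi-line contents are captured per pair.
    pattern = rf"<{name}>(.*?)</{name}>"
    return [m.strip() for m in re.findall(pattern, response, flags=re.DOTALL)]


if __name__ == "__main__":
    print(tagged_prompt("Summarize the report in one sentence.", "Q3 revenue rose 8%."))
    sample = "Here you go:\n<summary>\nRevenue grew in Q3.\n</summary>"  # made-up response
    print(extract_tag(sample, "summary"))  # ['Revenue grew in Q3.']
```

Because the model is asked to put its answer in a known tag, any chatty text around it can be ignored during post-processing.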

For example, wrapping each example in its own tag (and, when there are several, nesting them inside an enclosing tag) can help clarify the structure.
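A minimal Python sketch of that nesting follows. It assumes the tag names `example` and `examples`, which are illustrative names chosen for this sketch; as far as we understand, the LLM reads tags as plain text, so any consistent, descriptive names should serve. The reviews are invented sample data.

```python
def wrap_examples(examples: list[str]) -> str:
    """Wrap each example in <example> tags and nest them all in <examples>."""
    inner = "\n".join(f"<example>\n{ex}\n</example>" for ex in examples)
    return f"<examples>\n{inner}\n</examples>"


if __name__ == "__main__":
    block = wrap_examples([
        "Review: The battery lasts all day.\nSentiment: positive",
        "Review: The screen cracked in a week.\nSentiment: negative",
    ])
    prompt = (
        "Classify the sentiment of the review, following the format of the examples.\n\n"
        f"{block}\n\n"
        "Review: Shipping was slow, but the product works well."
    )
    print(prompt)
```

Keeping the examples inside their own tags makes it clear where the examples end and the real input begins.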

Image source: Anthropic

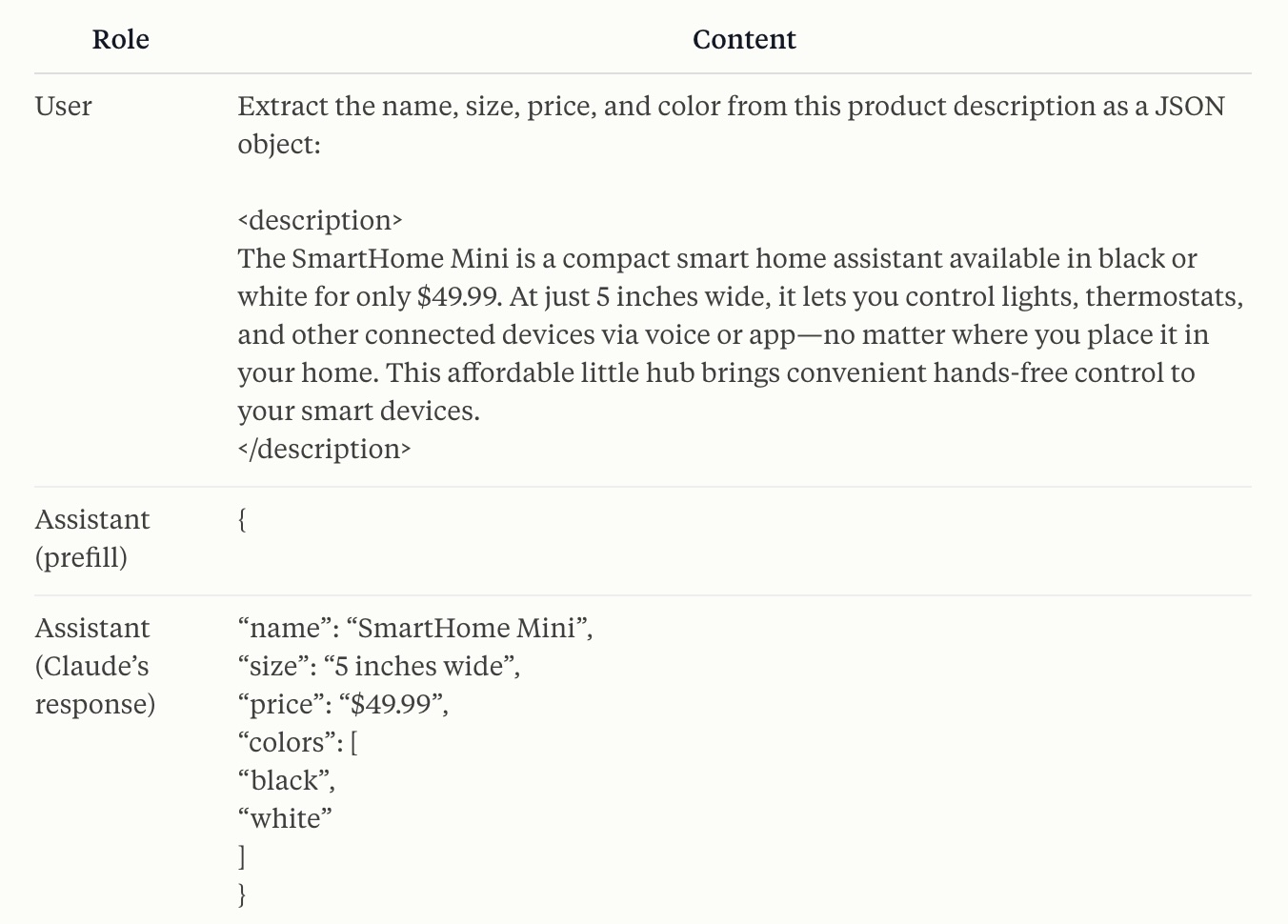

Image source: Anthropic

Another useful technique, when you want the output in JSON format, is to prefill the beginning of the response. This helps prevent formatting errors: if you prefill the opening brace {, the LLM skips any preamble and outputs the JSON object directly. The result is cleaner, more concise, and easier for programs to parse without additional processing.
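Below is a Python sketch of the JSON prefill pattern. It assumes a chat API that, like Anthropic's Messages API, treats a final assistant message as the start of the model's reply and returns only the text generated after it. The function names are hypothetical, no API call is made, and the continuation string is invented sample output. Because the `{` lives in the prefill, it has to be added back before parsing.

```python
import json

PREFILL = "{"  # the opening brace we write on the model's behalf


def make_messages(request: str) -> list[dict]:
    """User turn followed by a prefilled assistant turn that starts the JSON."""
    return [
        {"role": "user", "content": f"{request}\nRespond only with a JSON object."},
        {"role": "assistant", "content": PREFILL},
    ]


def parse_prefilled_json(continuation: str) -> dict:
    """The reply continues after the prefill, so put the '{' back before parsing."""
    return json.loads(PREFILL + continuation)


if __name__ == "__main__":
    messages = make_messages("Extract the name and age from: 'Alice is 30 years old.'")
    # With the Anthropic Python SDK, this list would be passed as the `messages`
    # argument of client.messages.create(...); no request is sent here.
    sample_continuation = '"name": "Alice", "age": 30}'  # invented sample output
    print(parse_prefilled_json(sample_continuation))  # {'name': 'Alice', 'age': 30}
```

Forgetting to prepend the prefill is a common source of "invalid JSON" errors with this pattern, which is why the parsing step is kept next to the prefill constant.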

Image source: Anthropic

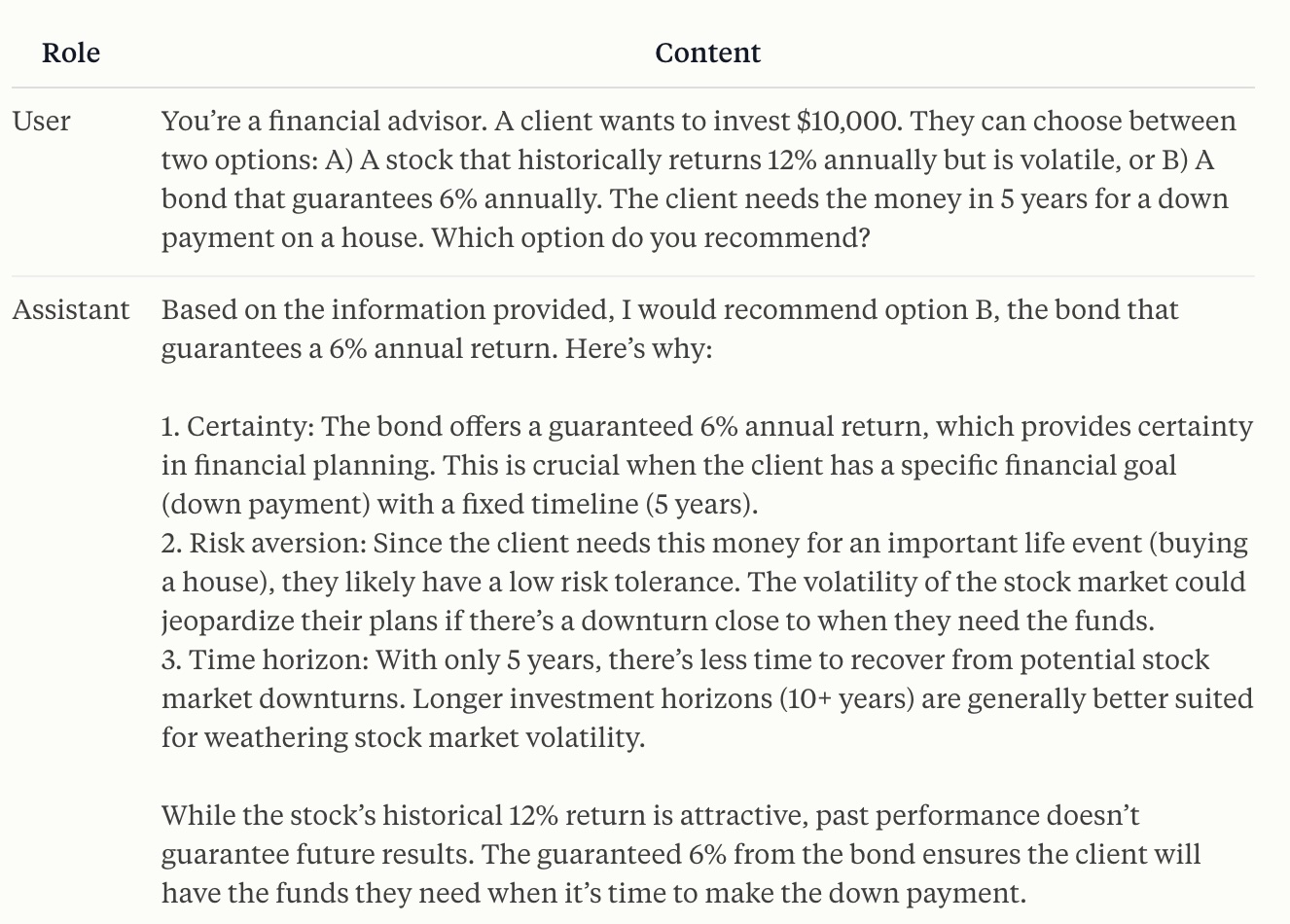

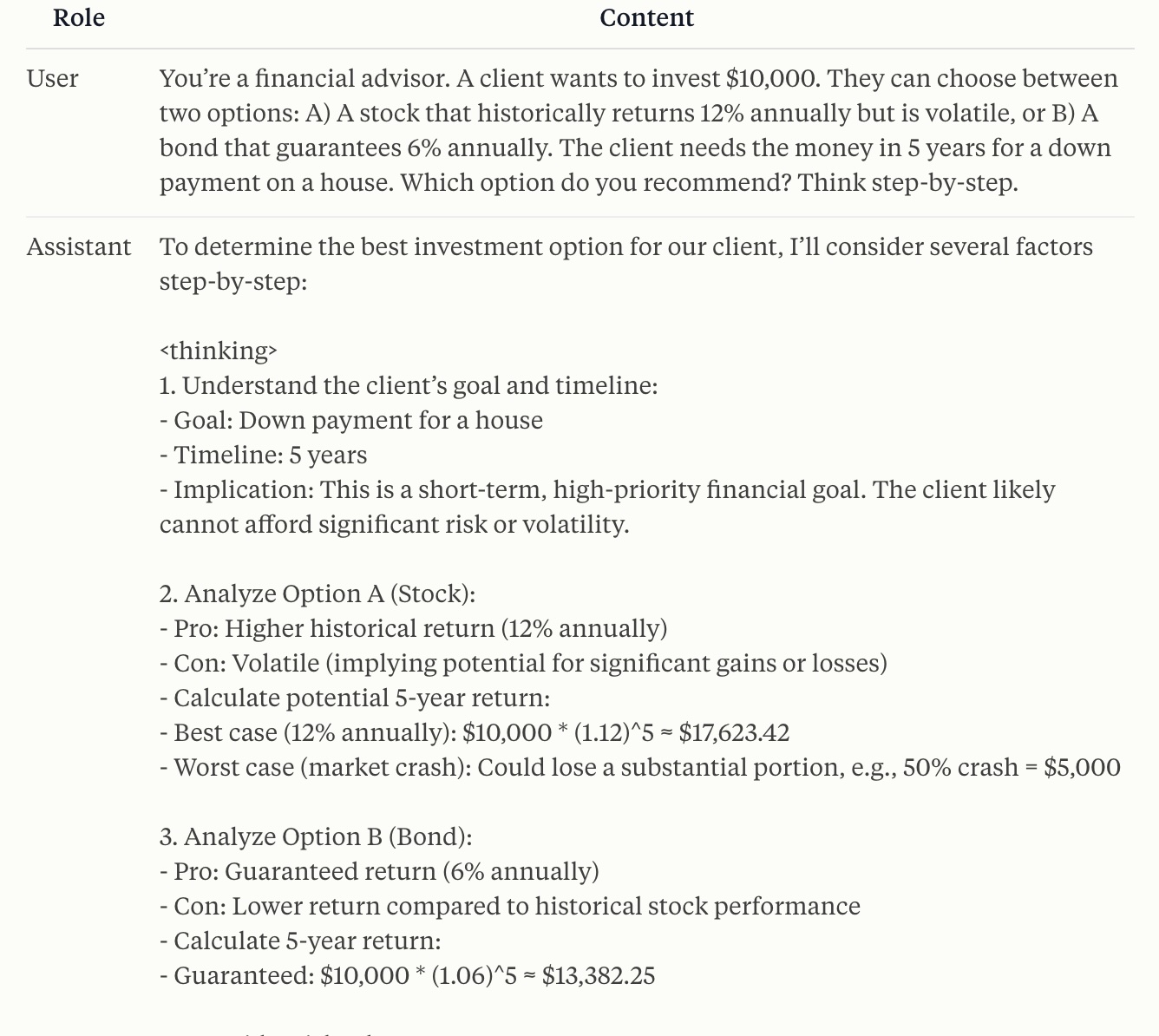

Asking the LLM to think step-by-step is another effective technique. In its simplest (basic) form, you just include "Think step-by-step" in your prompt. In a guided prompt, you go further and outline the specific steps the LLM should follow in its thinking process.
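To illustrate the difference between the basic and guided approaches, here is a small Python sketch that builds both kinds of prompt. The function names, the example steps, and the "Answer:" line convention are our own assumptions (the last one added so the final answer is easy to locate after the reasoning); they are not taken from Anthropic's examples.

```python
def basic_cot(task: str) -> str:
    """Basic prompt: append the plain step-by-step instruction."""
    return f"{task}\n\nThink step-by-step."


def guided_cot(task: str, steps: list[str]) -> str:
    """Guided prompt: spell out the steps the LLM should work through."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"{task}\n\n"
        f"Before answering, think through these steps in order:\n{numbered}\n\n"
        "Then give your final answer on a separate line starting with 'Answer:'."
    )


if __name__ == "__main__":
    task = "Recommend one of three laptop models for a student on a tight budget."
    print(basic_cot(task))
    print()
    print(guided_cot(task, [
        "List the student's likely needs.",
        "Compare each model's price against those needs.",
        "Pick the model with the best fit and note one trade-off.",
    ]))
```

The guided version costs a little more prompt text, but it lets you decide what the LLM reasons about rather than leaving the steps entirely to the model.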

Before - Prompt Tuning:

Image source: Anthropic

After - Prompt Tuning:

Image source: Anthropic

Conclusion

Prompt engineering is a critical skill for getting the most out of LLMs. By designing prompts that are clear, specific, and relevant, you can obtain useful responses that match your expectations. In this post, we introduced the key requirements for good prompts along with some practical examples. As LLMs become increasingly integrated into our daily lives, now is a good time to learn how to write effective prompts.