Vector Search Engine: A Hands-On Example

📌 Implementing a Vector Search Engine with Qdrant Cloud

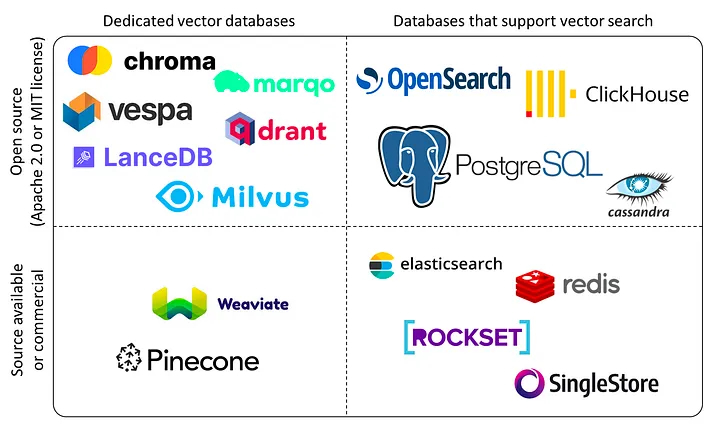

What is a Vector Database and Why is it Gaining Attention?

A vector database is a type of database that stores data as vectors instead of traditional rows and columns. Vectors are numerical representations of data points, which can capture the essence of complex data such as images, texts, and even audio. This capability makes vector databases particularly useful in applications requiring similarity searches, which are common in fields like machine learning, natural language processing, and computer vision.

Image source: Why You Shouldn’t Invest In Vector Databases?

Image source: Why You Shouldn’t Invest In Vector Databases?

The surge in interest in vector databases is driven by the rapid advancements in AI and machine learning. Traditional databases struggle to manage and search through high-dimensional data effectively. Vector databases, on the other hand, are designed to handle such data with ease, enabling efficient and accurate similarity searches. This makes them invaluable for modern applications such as recommendation systems, image and video search, and natural language understanding.

What is Vector Search and Why is it Necessary?

Vector search involves finding vectors in a high-dimensional space that are similar to a given query vector. This is essential in many AI applications. For instance, in a recommendation system, vector search can quickly identify items similar to those a user has previously interacted with. In image search, it can find visually similar images, and in natural language processing, it can retrieve semantically similar texts.

The necessity of vector search arises from the limitations of exact matching methods in handling unstructured and high-dimensional data. Exact matching can miss relevant items that are not identical but similar in some aspects. Vector search addresses this by enabling similarity-based retrieval, which is more aligned with how humans perceive similarities.

What is Qdrant and Why Do We Need It?

Qdrant is an advanced vector database that provides efficient and accurate similarity search capabilities. It leverages the power of vectors to manage high-dimensional data, making it an essential tool for applications involving machine learning and artificial intelligence.

Image source: social-preview-H.png (1280×640) (qdrant.tech)

Image source: social-preview-H.png (1280×640) (qdrant.tech)

Why Qdrant is Necessary

In an era where data is growing exponentially and the need for fast, accurate search results is critical, Qdrant fills a crucial gap. Traditional databases are not optimized for the types of high-dimensional data used in AI and ML applications. Qdrant offers a dedicated platform for storing and querying vector data, addressing these specific needs.

Technical Features of Qdrant

- High-Performance Similarity Search: Qdrant is optimized for speed and accuracy, using advanced algorithms to quickly find the most similar vectors in large datasets.

- Scalability: Designed to scale with your needs, Qdrant can handle millions to billions of vectors without compromising performance.

- Easy Integration: Qdrant integrates seamlessly with popular machine learning frameworks and libraries, simplifying its adoption into existing workflows.

- Real-Time Updates: Supports real-time updates, allowing for the addition, deletion, or modification of vectors on the fly without affecting search performance.

- Rich API: Provides a comprehensive API for programmatic interaction, facilitating automation and integration.

Comparing Qdrant with Other Vector Databases

Advantages of Qdrant

- Performance: Qdrant outperforms many other vector databases in search speed and accuracy. Its optimized algorithms and data structures ensure fast query times even with large datasets.

- User-Friendly: Qdrant's intuitive interface and robust API make it accessible to developers of all skill levels. The seamless integration with machine learning frameworks further enhances its usability.

- Scalability: Unlike some vector databases that struggle with large datasets, Qdrant is designed to scale efficiently, handling vast amounts of data without sacrificing performance.

- Real-Time Capabilities: The ability to update vectors in real time is a significant advantage, especially for applications requiring constant data updates and immediate query results.

Disadvantages of Qdrant

- Resource Intensive: Due to its high-performance capabilities, Qdrant can be resource-intensive, requiring more powerful hardware and infrastructure compared to simpler databases.

- Complexity: For users unfamiliar with vector databases, the initial learning curve can be steep. Understanding vector representations and similarity search algorithms is crucial to fully leverage Qdrant's capabilities.

Sample Code for Using Qdrant Cloud

Below are code blocks that will demonstrate how to build a vector search engine using Qdrant Cloud. The code will include sections for importing embedding data, building an API with FastAPI, and implementing the search engine, along with the necessary package list.

Embedding Data Import (load.py)

import json

import logging

import os

import time

import pandas as pd

from qdrant_client import QdrantClient

from qdrant_client.http import models as rest

from qdrant_client.conversions import common_types as types

from ast import literal_eval

from .consts import COLLECTION_NAME, EMBEDDING_DATA

logger = logging.getLogger()

logger.setLevel(logging.INFO)

consoleHandler = logging.StreamHandler()

logger.addHandler(consoleHandler)

logger.setLevel(logging.INFO)

qdrant_host = os.environ["QDRANT_HOST"]

qdrant_api_key = os.environ["QDRANT_API_KEY"]

CHUNK_SIZE = 500

def load_embeddings_in_chunks(

q_client: QdrantClient, file_path: str, chunk_size=CHUNK_SIZE

):

logger.info(f"Processing {file_path}")

# create collection if not exists

collections: types.CollectionsResponse = q_client.get_collections()

collections_names = [col.name for col in collections.collections]

logger.info(f"Existing collections: {collections_names}")

with open(file_path, "r") as file:

vector_size = 0

count_rows = 0

for _, df_chunk in enumerate(pd.read_csv(file, chunksize=chunk_size)):

df_chunk["embeddings"] = df_chunk["embeddings"].apply(literal_eval)

if vector_size == 0:

vector_size = len(df_chunk.iloc[0]["embeddings"])

logger.info(f"vector_size: {vector_size}")

# create collection if not exists

if COLLECTION_NAME not in collections_names:

q_client.recreate_collection(

collection_name=COLLECTION_NAME,

vectors_config={

"content": rest.VectorParams(

distance=rest.Distance.COSINE,

size=vector_size,

),

},

)

logger.info(f"Created collection: {COLLECTION_NAME}")

collections: types.CollectionsResponse = q_client.get_collections()

collections_names = [col.name for col in collections.collections]

points = [

rest.PointStruct(

id=row["id"],

vector={

"content": row["embeddings"],

},

payload=json.loads(row["properties"]),

)

for _, row in df_chunk.iterrows()

]

res: types.UpdateResult = q_client.upsert(

collection_name=COLLECTION_NAME,

points=points,

wait=True,

)

logger.info(f"Upserted chunk to collection: {res}")

count_rows += len(points)

logger.info(f"Loaded {count_rows} rows collection.")

def load_to_db(q_client: QdrantClient):

start_time = time.time()

logger.info("Loading embeddings to db")

# Assuming EMBEDDING_DATA contains file paths for the embeddings

file_path = f"./embeddings/embedding1.csv"

load_embeddings_in_chunks(q_client, file_path)

file_path = f"./embeddings/embedding2.csv"

load_embeddings_in_chunks(q_client, file_path)

file_path = f"./embeddings/embedding3.csv"

load_embeddings_in_chunks(q_client, file_path)

logger.info(

f"Loaded embeddings to db. Spent time: {str(time.time() - start_time)} seconds"

)

def main():

qdrant_client = QdrantClient(qdrant_host, api_key=qdrant_api_key)

load_to_db(qdrant_client)

if __name__ == "__main__":

main()

Building the API with FastAPI (main.py)

import logging

import os

from fastapi import FastAPI, HTTPException

from .search_engine import search_query, q_client, vector_size

if os.getenv("OPENAI_API_KEY", "") == "":

raise Exception("OPENAI_API_KEY is not set")

# setting log go into stdout

logger = logging.getLogger()

logger.setLevel(logging.INFO)

consoleHandler = logging.StreamHandler()

logger.addHandler(consoleHandler)

logger.setLevel(logging.INFO)

app = FastAPI()

@app.get("/health")

def health():

return {"status": "ok"}

@app.get("/count")

def db_size():

count = vector_size()

return {"count": count}

@app.get("/search")

def search(query: str, top_k: int = 20):

try:

result = search_query(query, top_k=top_k)

except ValueError as e:

raise HTTPException(status_code=404, detail="Item not found")

return result

if __name__ == "__main__":

# for test purpose

import uvicorn

uvicorn.run(app, host="0.0.0.0", port=8080)

Search Engine Implementation (search_engine.py)

import logging

import os

from time import time

import openai

import qdrant_client

from qdrant_client.conversions.common_types import CountResult

from .consts import COLLECTION_NAME

logger = logging.getLogger()

qdrant_host = os.getenv("QDRANT_HOST", None)

qdrant_api_key = os.getenv("QDRANT_API_KEY", None)

# Qdrant Cloud

if qdrant_host and qdrant_api_key:

q_client = qdrant_client.QdrantClient(

qdrant_host,

api_key=qdrant_api_key,

)

logger.info(f"Qdrant client running on Cloud: {qdrant_host}")

else:

q_client = qdrant_client.QdrantClient(":memory:")

logger.info(f"Qdrant client running on local machine.")

def vector_size():

try:

res: CountResult = q_client.count(collection_name=COLLECTION_NAME)

return res.count

except Exception as e:

logger.error(f"Error when counting: {e}")

return 0

def search_query(query: str, vector_name: str = "content", top_k: int = 20):

embds_start = time()

embedded_query = openai.Embedding.create(

input=query,

model="text-embedding-ada-002",

)["data"][0]["embedding"]

embds_end = time()

embds_time = embds_end - embds_start

logger.info(f"Embedding time: {embds_time}, query: {query}")

query_start = time()

query_results = q_client.search(

collection_name=COLLECTION_NAME,

query_vector=(vector_name, embedded_query),

limit=top_k,

)

query_end = time()

query_time = query_end - query_start

return {

"result": query_results,

"embedding_time": embds_time,

"query_time": query_time,

}

Constant Variables (consts.py)

COLLECTION_NAME = "my_first_collection"

EMBEDDING_DATA = {

"EMBEDDING_DATA1": "embedding1.csv",

"EMBEDDING_DATA2": "embedding2.csv",

"EMBEDDING_DATA3": "embedding3.csv"

}

Required Packages List (requirements.txt)

fastapi==0.101.0

uvicorn[standard]==0.23.2

gunicorn==21.2.0

langchain==0.0.100

qdrant-client==1.4.0

openai==0.27.8

pandas==2.0.3

How to run it?

Prerequisites

Install following tools and packages:

- Docker

- Docker Compose

- Python 3.9 and pip

- requirements.txt

Prepare your embedding data:

Setup

1. Data files

Create csv files and add them under embeddings directory.

Fow now, we have 3 files and their names should be:

- embedding1.csv

- embedding2.csv

- embedding3.csv

2. Environment variables

Create a file named .env from .env.example and fill in the values.

OPENAI_API_KEY: Key to connect to GPTQDRANT_HOST: Set it if using Qdrant cloudQDRANT_API_KEY: Set it if using Qdrant cloud

3. Start service as docker container

Run following command in the root directory of the project, it will build docker image and start service:

# Build and start the service

docker-compose up -d

# only build the image

docker-compose build

# only start the service

docker-compose up -d

# stop the service

docker-compose stop

This will start service on 127.0.0.1 on port 8080.

Deploy and Run

All endpoints are GET request.

Endpoints:

http://127.0.0.1:8080/health: Returnokmessage (for health check later)http://127.0.0.1:8080/count: Return number of vectors in databasehttp://127.0.0.1:8080/search?query=: Search similarity endpoint withqueryparameter for query string andtop_kparameter for number of results to return. Defaulttop_kis 20.- Example (with only

querystring):http://127.0.0.1:8080/search?query=VectorDB - Example (with only

querystring andtop_ksize):http://127.0.0.1:8080/search?query=VectorDB&top_k=1

Data initial load to Cloud

QDRANT_HOST="HOST_URL" QDRANT_API_KEY="API_KEY" python load.py

Notice

Deploying to Qdrant Cloud

Environment variables should be set in production environment.

In local development, docker-compose will read .env file and set environment variables for container.

Loading data

Currently, to make development simple, we have added /load endpoint for loading data from .csv files.

Conclusion and Summary

Qdrant is a powerful vector database tailored to meet the demands of modern AI and machine learning applications. Its ability to efficiently store and search high-dimensional data makes it indispensable for tasks involving similarity search. With features like high performance, scalability, and real-time updates, Qdrant stands out among vector databases.

While it can be resource-intensive and may have a steep learning curve, the benefits it offers make it a valuable tool for data scientists and developers. By leveraging Qdrant Cloud, developers can easily integrate vector search capabilities into their applications, enabling more accurate and efficient data retrieval.