GNN in Simple Terms

📌 The Evolution of Graph Neural Networks

Introduction

In the realm of artificial intelligence, Graph Neural Networks (GNNs) have emerged as a cutting-edge approach for handling data structured in graphs. These networks are adept at capturing complex relationships and patterns within data that traditional neural network models might miss. By leveraging the unique properties of graphs, GNNs offer a powerful tool for a variety of applications, from social network analysis to molecular structure examination.

Image source: Dall-E: Applications of Graph Neural Networks

Graph Neural Networks (GNNs) have changed how we approach machine learning for graph-structured data, making it possible to learn directly from complex relational patterns. This blog traces their journey from conceptual frameworks to pivotal technologies across numerous domains.

The Evolution of Graph Neural Networks

The Genesis of GNNs

The inception of GNNs was driven by the need to process data represented as graphs, a common structure in numerous scientific and practical fields. Traditional neural networks expect fixed-size, regularly ordered inputs such as grids or sequences, so they struggled with the irregularities of graph data, where each node can have a different number of neighbors and there is no canonical node ordering. GNNs were developed to fill this gap. They are designed to capture the relationships and interactions within graphs, which has made them a fundamental tool in modern AI research.

Understanding GNNs: From Theory to Application

At their core, GNNs utilize the concept of message passing, where nodes aggregate information from their neighbors, allowing for the dynamic updating of node representations. This section introduces the foundational concepts of graph theory that underpin GNNs, including nodes, edges, and how these elements contribute to the network's learning process.
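The message-passing idea above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production GNN: the toy graph, the single scalar feature per node, and the equal 0.5/0.5 mixing of a node's own value with its neighbors' mean are all assumptions chosen to keep the arithmetic easy to follow. A real GNN layer would use learned weights and vector features.

```python
# Toy undirected graph: node -> list of neighbours (hypothetical example data).
graph = {
    "a": ["b", "c"],
    "b": ["a"],
    "c": ["a"],
}

# One scalar feature per node keeps the arithmetic easy to check by hand.
features = {"a": 1.0, "b": 2.0, "c": 3.0}


def message_passing_step(graph, features):
    """Return updated features after one round of mean-aggregation message passing."""
    updated = {}
    for node, neighbours in graph.items():
        if neighbours:
            # Aggregate: mean of the messages (here, raw features) from neighbours.
            aggregated = sum(features[n] for n in neighbours) / len(neighbours)
        else:
            # Isolated nodes have nothing to aggregate, so they keep their own value.
            aggregated = features[node]
        # Update: mix the node's previous state with the aggregated message.
        updated[node] = 0.5 * features[node] + 0.5 * aggregated
    return updated


new_features = message_passing_step(graph, features)
```

After one step, node "a" moves from 1.0 to 1.75 because it is pulled toward the mean of its two neighbors (2.5). Repeating the step lets information travel further across the graph, one hop per round.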

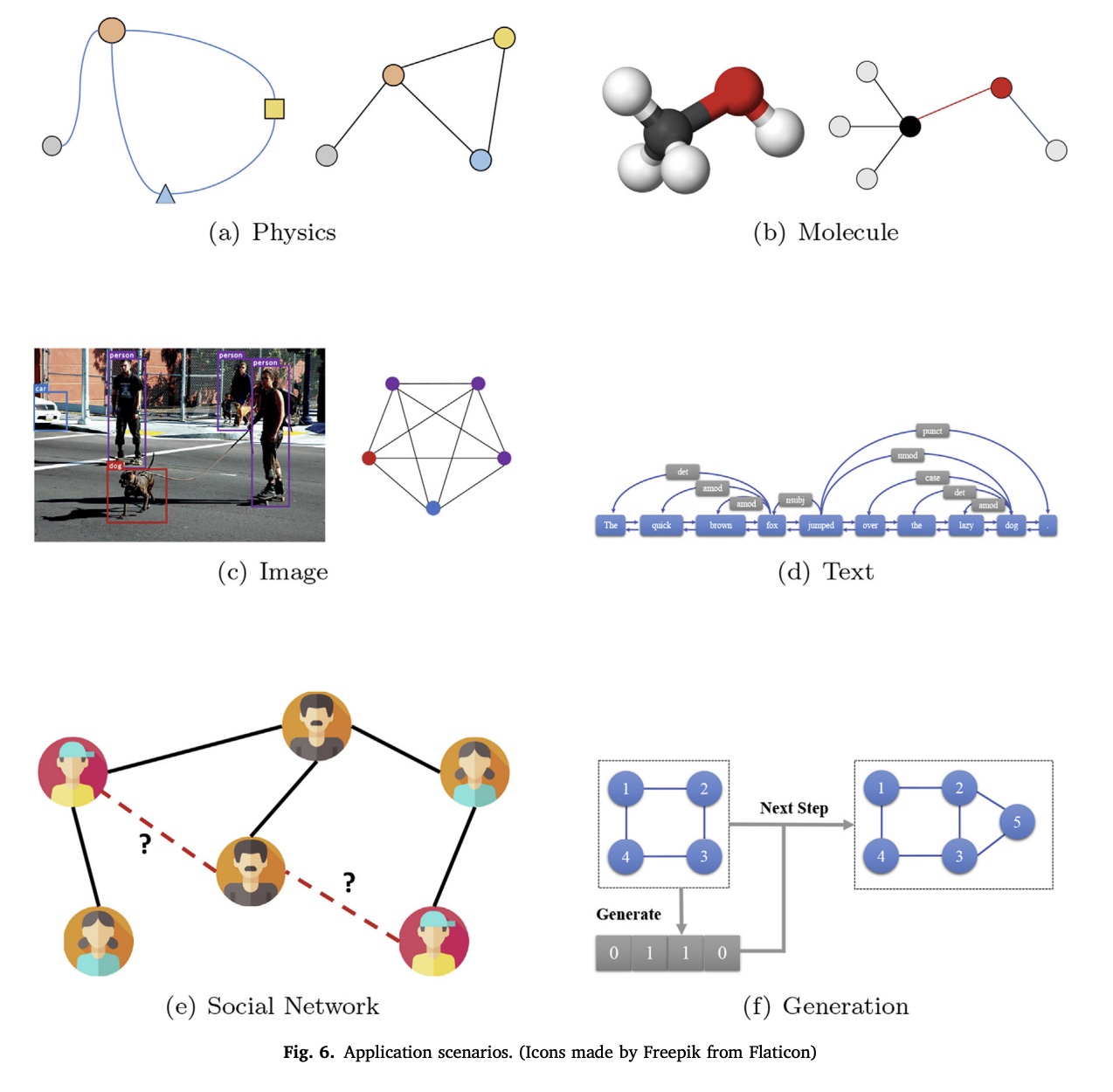

GNNs have found applications in a myriad of fields, demonstrating their versatility. This section provides a detailed look at how GNNs are used in drug discovery by modeling molecular structures, in social networks to analyze connectivity patterns, and in e-commerce to enhance recommendation systems. The transformative impact of GNNs across these domains showcases their ability to handle data's relational and structural complexities.

Image source: Graph neural networks: A review of methods and applications

In drug discovery, GNNs are used to predict potential side effects of drugs by analyzing the complex interactions between proteins and chemical compounds, significantly speeding up the drug development process. GNNs offer significant advantages over traditional algorithms due to their ability to model the complex, non-Euclidean structures of molecular data. Unlike traditional methods that may overlook the intricate relationships and spatial arrangements between atoms and molecules, GNNs can capture these features through their graph-based approach. They effectively represent molecules as graphs, where nodes can be atoms and edges represent bonds, enabling the model to learn from the molecular structure directly. This allows for more accurate predictions of molecular properties, interactions, and potential drug efficacy, leading to more efficient and targeted drug discovery processes.
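To make the "atoms as nodes, bonds as edges" representation concrete, here is a small sketch that encodes a water molecule as a graph. The helper name `build_adjacency` and the choice of bond count as a feature are illustrative assumptions. Real pipelines typically use a chemistry toolkit and much richer atom and bond features.

```python
# Water (H2O) as a graph: nodes are atoms, edges are bonds.
atoms = ["O", "H", "H"]    # node index -> element symbol
bonds = [(0, 1), (0, 2)]   # undirected edges between atom indices


def build_adjacency(num_nodes, edges):
    """Build an adjacency list for an undirected graph."""
    adjacency = {i: [] for i in range(num_nodes)}
    for u, v in edges:
        adjacency[u].append(v)
        adjacency[v].append(u)
    return adjacency


adjacency = build_adjacency(len(atoms), bonds)

# Number of bonds per atom: a simple structural feature a model could consume.
degrees = {i: len(neighbours) for i, neighbours in adjacency.items()}
```

The oxygen atom ends up with two neighbors and each hydrogen with one, which is exactly the structural information a GNN reads from the graph rather than from a flat feature vector.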

Image source: Graph neural networks: A review of methods and applications

In social network analysis, GNNs can identify communities by learning node embeddings that cluster similar users together based on their connections and interactions within the network. GNNs excel over traditional algorithms primarily due to their ability to capture complex relational patterns and community structures inherent in social networks. Traditional methods may struggle with the dynamic and interconnected nature of social data, often failing to account for the depth of relationships and influence between entities. GNNs, through their graph-based approach, naturally model these networks, considering the connections and interactions between nodes (individuals or entities) to better understand and predict behaviors, trends, and influences within the network. This makes GNNs particularly effective for tasks such as community detection, influence prediction, and personalized recommendation systems in social networks.

Another example is autonomous driving. Here, Graph Neural Networks (GNNs) combined with scene graph representations are changing how autonomous vehicles perceive, interpret, and interact with their surroundings. Scene graphs organize the components of a road scene (vehicles, pedestrians, traffic signs) into a structured graph format, capturing the relationships and dynamics among them.
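A scene graph of this kind can be sketched as a list of (subject, relation, object) triples. The entity and relation names below are hypothetical and are not taken from roadscene2vec or any other tool. The sketch only shows the shape of the data structure.

```python
from collections import defaultdict

# (subject, relation, object) triples; all names here are hypothetical.
scene_triples = [
    ("ego_car", "in_lane", "middle_lane"),
    ("pedestrian_1", "near", "ego_car"),
    ("car_2", "in_lane", "left_lane"),
    ("car_2", "left_of", "ego_car"),
]


def build_scene_graph(triples):
    """Group outgoing (relation, object) edges by subject node."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return dict(graph)


scene_graph = build_scene_graph(scene_triples)
```

Because the graph simply grows or shrinks with the number of triples, the same structure can describe a quiet street or a crowded intersection, which is why a model that handles variable-size graphs fits this data well.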

Image source: roadscene2vec: A tool for extracting and embedding road scene-graphs

GNNs suit this scenario because they process graph-structured data efficiently, which lets vehicles handle complex relationships, adapt to varying scene sizes, learn meaningful feature representations, and reason about both spatial and temporal changes. This combination strengthens the vehicle's perception, prediction, and decision-making, paving the way for safer, more intelligent autonomous navigation systems. With GNNs and scene graphs, autonomous vehicles move closer to a human-like understanding of, and responsiveness to, the complexities of real-world driving.

Architectural Innovations in GNNs

This part explores the diversity of GNN architectures, highlighting their evolution from simple recurrent models to sophisticated convolutional and attention-based designs. The spectral-based methods, which leverage the graph's Laplacian matrix, and spatial-based methods, which directly use the graph's structure, are compared to showcase their application-specific advantages.
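For readers unfamiliar with the Laplacian mentioned above, here is a minimal sketch of its simplest form, the combinatorial Laplacian L = D - A, where A is the adjacency matrix and D is the diagonal degree matrix. Many spectral methods use a normalized variant instead, and the path graph used here is just an illustrative assumption.

```python
def graph_laplacian(adjacency):
    """Return L = D - A for a symmetric 0/1 adjacency matrix given as nested lists."""
    n = len(adjacency)
    laplacian = [[0] * n for _ in range(n)]
    for i in range(n):
        degree = sum(adjacency[i])  # number of edges touching node i
        for j in range(n):
            diagonal = degree if i == j else 0
            laplacian[i][j] = diagonal - adjacency[i][j]
    return laplacian


# Path graph 0 - 1 - 2 (toy example).
A = [
    [0, 1, 0],
    [1, 0, 1],
    [0, 1, 0],
]
L = graph_laplacian(A)
```

Every row of L sums to zero, a property spectral methods rely on. Spatial methods skip this matrix view and work directly with each node's neighbor list.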

Image source: Graph neural networks: A review of methods and applications

The architecture of GNNs has evolved significantly, with key innovations including Graph Convolutional Networks (GCNs) that generalize convolutional operations to graph data, and Graph Attention Networks (GATs) that introduce attention mechanisms to weigh the importance of neighboring nodes dynamically.
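As a rough illustration of how a convolution generalizes to graphs, the sketch below implements one commonly used GCN-style layer: add self-loops, normalize the adjacency matrix symmetrically by node degree, aggregate neighbor features, apply a weight matrix, and pass the result through ReLU. It uses plain nested lists instead of a tensor library, and the toy graph, features, and weights are assumptions for demonstration only.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gcn_layer(adjacency, features, weight):
    """One GCN-style layer: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    n = len(adjacency)
    # Add self-loops so every node also keeps its own features.
    a_tilde = [
        [adjacency[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)
    ]
    degree = [sum(row) for row in a_tilde]
    # Symmetric normalisation damps the influence of high-degree nodes.
    a_hat = [
        [a_tilde[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
        for i in range(n)
    ]
    hidden = matmul(matmul(a_hat, features), weight)
    return [[max(0.0, value) for value in row] for row in hidden]


# Two connected nodes, one feature each, identity weight (toy values).
adjacency = [[0, 1], [1, 0]]
features = [[1.0], [3.0]]
weight = [[1.0]]
output = gcn_layer(adjacency, features, weight)
```

With these toy values both nodes end up with the feature 2.0, the average of 1.0 and 3.0, which shows the smoothing effect of a single graph convolution.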

Image source: Graph neural networks: A review of methods and applications

GATs have been particularly effective in node classification tasks, such as classifying scientific papers in a citation network based on the content and citation relationships.
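The attention step in a GAT can be sketched as follows: score each neighbor with a small learned function, then normalize the scores with a softmax so they sum to one. In this simplified sketch the feature vectors are assumed to be already linearly transformed, the attention vector `a` holds made-up values rather than learned ones, and the paper identifiers are hypothetical.

```python
import math


def leaky_relu(x, slope=0.2):
    """LeakyReLU keeps a small gradient for negative inputs."""
    return x if x > 0 else slope * x


def attention_weights(node, neighbours, h, a):
    """Softmax-normalised attention of `node` over its `neighbours`.

    h: node -> feature vector (assumed already multiplied by the weight matrix)
    a: attention vector of length 2 * len(h[node])
    """
    scores = []
    for neighbour in neighbours:
        concat = h[node] + h[neighbour]  # concatenate the two feature vectors
        scores.append(leaky_relu(sum(w * x for w, x in zip(a, concat))))
    # Subtract the max score before exponentiating for numerical stability.
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return {nb: e / total for nb, e in zip(neighbours, exps)}


# Hypothetical citation graph: paper p1 cites p2 and p3.
h = {"p1": [1.0], "p2": [1.0], "p3": [2.0]}
a = [0.5, 0.5]  # illustrative values; in a GAT these are learned
weights = attention_weights("p1", ["p2", "p3"], h, a)
```

Here p3 receives a larger weight than p2 because its score is higher. In a trained model, these weights decide how much each cited paper contributes to the updated representation of p1.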

To summarize, Graph Neural Networks (GNNs) have seen several architectural advances aimed at improving their performance, scalability, and applicability across a wide range of tasks. Here's an overview of some of the newer architectures and concepts in the GNN domain:

- Heterogeneous Graph Neural Networks (HGNNs): These architectures are designed to handle heterogeneous graphs, which contain multiple types of nodes and edges. HGNNs can capture the rich semantic relationships in such graphs, making them suitable for complex applications like knowledge graphs, recommendation systems, and more. Examples include Heterogeneous Graph Attention Network (HAN) and Heterogeneous Graph Transformer (HGT).

- Dynamic Graph Neural Networks: Recognizing that many real-world graphs are dynamic (changing over time), these GNN architectures are designed to adapt to graph changes. They can update their embeddings for nodes and edges as the graph evolves, making them ideal for temporal graph analysis, social network dynamics, and traffic forecasting.

- Graph Transformers: Inspired by the success of Transformers in natural language processing, Graph Transformers apply similar self-attention mechanisms to graph-structured data. These models can capture long-range dependencies within graphs and have shown impressive performance on various tasks. The Graphormer is a notable example, demonstrating significant improvements on standard benchmarks.

- Spatial-Temporal Graph Neural Networks (ST-GNNs): These models are specifically designed for spatial-temporal data, such as traffic networks and human activity recognition. They simultaneously capture spatial relationships (how entities are connected) and temporal dynamics (how these connections change over time), offering advanced insights for forecasting and behavior analysis.

- Neural Architecture Search (NAS) for GNNs: NAS techniques automate the design of optimal GNN architectures for specific tasks. By exploring a predefined search space, NAS can identify the most effective combination of GNN layers, pooling operations, and other architectural components, potentially uncovering new best practices for GNN design.

- Scalable GNNs: Addressing the challenge of applying GNNs to large-scale graphs, scalable GNN architectures focus on reducing computational complexity and memory usage. Techniques such as graph sampling, subgraph training, and hierarchical graph representations enable these models to handle massive graphs with millions of nodes and edges.

- Graph Contrastive Learning: This approach, which does not require labeled data, uses contrastive loss to learn node or graph embeddings by maximizing the agreement between similar pairs of graphs or nodes and minimizing it between dissimilar ones. It's a powerful method for learning representations in an unsupervised manner.

Each of these advancements targets specific challenges within the GNN domain, from handling heterogeneity and dynamics in graph data to improving model scalability and learning efficiency. As research progresses, we can expect further innovations that will expand the capabilities and applications of GNNs.
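As one concrete illustration from the list above, the contrastive objective used in graph contrastive learning can be sketched with an InfoNCE-style loss. This is one common choice of contrastive loss, not necessarily the one used in any specific paper referenced here, and the embeddings and temperature below are made-up values.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.5):
    """InfoNCE-style loss: low when the anchor is closer to the positive than to the negatives."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Toy 2-D embeddings (hypothetical): two views of the same node plus unrelated nodes.
anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
aligned_loss = info_nce(anchor, [0.9, 0.1], negatives)
misaligned_loss = info_nce(anchor, [-0.9, 0.1], negatives)
```

The loss is smaller when the positive pair is well aligned, so minimizing it pulls embeddings of similar nodes or graphs together and pushes dissimilar ones apart without needing labels.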

Challenges and Future Directions

Despite their advancements, GNNs face challenges such as handling large-scale graphs efficiently and improving model interpretability. Future research is directed towards overcoming these challenges, with efforts focused on scalable architectures, transfer learning, and explainable AI.

For example, scalability is being addressed through techniques like graph sampling and partitioning, which allow GNNs to train on subgraphs for improved efficiency without significant loss in performance.
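A minimal sketch of neighbor sampling, one form of graph sampling, is shown below. Instead of aggregating over every neighbor, each node keeps at most `k` randomly chosen neighbors, which bounds the work per node on large graphs. The function names, the star-shaped toy graph, and the one-hop scope are assumptions for illustration.

```python
import random


def sample_neighbours(graph, node, k, rng):
    """Return at most k neighbours of `node`, chosen uniformly without replacement."""
    neighbours = graph[node]
    if len(neighbours) <= k:
        return list(neighbours)
    return rng.sample(neighbours, k)


def sample_subgraph(graph, seeds, k, rng):
    """Build a one-hop sampled subgraph around the seed nodes."""
    return {seed: sample_neighbours(graph, seed, k, rng) for seed in seeds}


# Star graph: node 0 is connected to nodes 1 through 4 (toy example).
graph = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}
rng = random.Random(0)  # fixed seed so runs are repeatable
subgraph = sample_subgraph(graph, seeds=[0, 1], k=2, rng=rng)
```

Node 0 keeps only two of its four neighbors, while node 1 keeps its single neighbor. Training on such sampled subgraphs trades a little variance for a large reduction in memory and compute.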

Conclusion

Graph Neural Networks stand at the forefront of a paradigm shift in machine learning, offering a nuanced approach to data with inherent relational structures. As we continue to explore and expand upon GNNs, their role in advancing AI and computational sciences is undoubtedly significant. This exploration invites readers to consider the vast potential of GNNs, encouraging further research and application in this dynamic field.

References

- Graph neural networks: A review of methods and applications

- A Comprehensive Survey on Graph Neural Networks

- roadscene2vec: A tool for extracting and embedding road scene-graphs

- Heterogeneous Graph Neural Network

- Foundations and Modeling of Dynamic Networks Using Dynamic Graph Neural Networks: A Survey

- Do Transformers Really Perform Bad for Graph Representation?

- Spatial-temporal graph neural network for traffic forecasting: An overview and open research issues

- Auto-GNN: Neural architecture search of graph neural networks

- Scalable Graph Neural Networks via Bidirectional Propagation

- Graph Contrastive Learning with Augmentations