MLOps

📌 Let's recap MLOps with the help of ChatGPT.

Foreword by ChatGPT

MLOps, short for Machine Learning Operations, is a relatively new approach to managing and deploying machine learning models. MLOps combines practices and principles from software engineering and data science to ensure that machine learning models can be efficiently developed, tested, deployed, and monitored.

MLOps helps to streamline the development and deployment of machine learning models by creating a systematic approach to managing the entire machine learning pipeline. This pipeline includes data preparation, model training, model testing, model deployment, and model monitoring. MLOps ensures that all of these steps are managed effectively and efficiently to deliver the best possible results.

Contents

A summary of MLOps

- What is MLOps?

- Comparison between DevOps and MLOps

- Key Phases of MLOps

- Experiment Tracking

- Model Management

How to implement MLOps

- MLOps level 0

- MLOps level 1

- MLOps level 2

MLOps Best Practices in a Few Words

- Team

- Data

- Objective (Metrics & KPIs)

- Model

- Code

- Deployment

A summary of MLOps

What is MLOps?

MLOps, short for Machine Learning Operations, is a set of procedures and practices designed to ensure that machine learning models can be deployed and maintained in production efficiently.

Implementing these practices helps improve model quality, streamline management processes, and automate the deployment of ML models in large-scale production environments.

By adopting MLOps, businesses can ensure that their ML models are better aligned with both their specific needs and regulatory requirements.

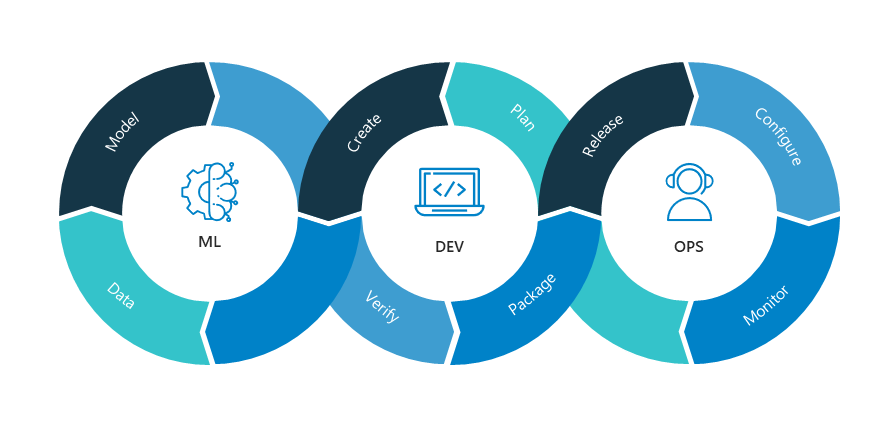

Image Source: NEAL ANALYTICS

Comparison between DevOps and MLOps

In this section, we will explore the differences and similarities between DevOps and MLOps. Even though MLOps derives from DevOps principles, there are notable distinctions in their implementation.

Firstly, MLOps is characterized by its experimental nature, which sets it apart from DevOps. In particular, testing, deploying, and monitoring ML systems pose challenges that traditional software development does not face, because a model's behaviour depends on its training data as well as on its code.

In terms of similarities, both DevOps and MLOps rely on source control, unit testing, integration testing, and continuous delivery of software modules or packages. However, ML systems differ in a few notable ways. For instance, Continuous Integration (CI) in MLOps involves testing and validating not only code and components but also data, data schemas, and models.

In addition, Continuous Deployment (CD) in MLOps is no longer about a single software package or service but about a system, the ML training pipeline, that automatically deploys another service (the model prediction service) or rolls back changes to a model.

Lastly, Continuous Training (CT) is a new property that is unique to ML systems.

It involves automatically retraining and serving models to address production performance degradation caused by evolving data profiles or by training-serving skew.

Image Source: neptune.ai

Key Phases of MLOps

- Data gathering

- Data analysis

- Data transformation/preparation

- Model training & development

- Model validation

- Model serving

- Model monitoring

- Model re-training
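To make the phases above concrete, here is a toy, stdlib-only sketch that chains them into one pipeline. Everything in it is hypothetical: the function names, the synthetic one-feature dataset, the least-squares model, and the 0.5 error threshold are illustrative choices, not part of any particular MLOps tool. Monitoring and re-training are represented by re-running the same pipeline on fresh data.

```python
import random
import statistics

# Hypothetical toy pipeline: each function stands in for one phase listed above.

def gather_data(n=200, seed=0):
    """Data gathering: simulate (x, y) pairs where y is roughly 2x + 1."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.1)) for x in xs]

def analyze(data):
    """Data analysis: summary statistics to sanity-check the raw data."""
    xs = [x for x, _ in data]
    return {"count": len(data), "x_mean": statistics.fmean(xs)}

def prepare(data, train_frac=0.8):
    """Data preparation: split into training and validation sets."""
    cut = int(len(data) * train_frac)
    return data[:cut], data[cut:]

def train(train_set):
    """Model training: ordinary least squares fit of y = a*x + b."""
    xs = [x for x, _ in train_set]
    ys = [y for _, y in train_set]
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
    a = sum((x - x_bar) * (y - y_bar) for x, y in train_set) / sum((x - x_bar) ** 2 for x in xs)
    return {"a": a, "b": y_bar - a * x_bar}

def predict(model, x):
    """Model serving: the prediction function a service would expose."""
    return model["a"] * x + model["b"]

def validate(model, val_set):
    """Model validation: mean absolute error on held-out data."""
    return statistics.fmean(abs(predict(model, x) - y) for x, y in val_set)

def run_pipeline(data=None, max_mae=0.5):
    """Run the phases in order; re-training is simply another call with fresh data."""
    data = data if data is not None else gather_data()
    stats = analyze(data)
    train_set, val_set = prepare(data)
    model = train(train_set)
    mae = validate(model, val_set)
    # Only a model that passes validation should be promoted to serving.
    return {"model": model, "val_mae": mae, "deployable": mae <= max_mae, "stats": stats}
```

Calling `run_pipeline()` returns the fitted coefficients, the validation error, and whether the model cleared the (arbitrary) threshold; passing `gather_data(seed=1)` simulates a re-training run on newly collected data.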

Experiment Tracking

In MLOps, experiment tracking is the process of collecting, organizing, and tracking information about model training. This information spans multiple runs with varying configurations, such as hyper-parameters, model size, data splits, and other parameters. Because ML/DL development is experimental, experiment tracking tools are used to benchmark models produced by different team members, different teams, or even different companies.
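Dedicated tools (neptune.ai, cited elsewhere in this post, is one example) handle this at scale. The sketch below only illustrates the core idea under simple assumptions: each run's parameters, metrics, and tags are appended as one JSON line to a shared file, and runs can then be benchmarked on a chosen metric. The `ExperimentTracker` class and its methods are hypothetical, not the API of any real tool.

```python
import json
import time
import uuid
from pathlib import Path

class ExperimentTracker:
    """Hypothetical minimal tracker: one JSON line per run in a shared file."""

    def __init__(self, path):
        self.path = Path(path)

    def log_run(self, params, metrics, tags=None):
        """Record one training run (configuration + results) and return its ID."""
        record = {
            "run_id": uuid.uuid4().hex,
            "timestamp": time.time(),
            "params": params,    # e.g. hyper-parameters, model size, data split
            "metrics": metrics,  # e.g. validation loss
            "tags": tags or {},  # e.g. author or team, to compare across people
        }
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
        return record["run_id"]

    def runs(self):
        """Load every recorded run."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def best_run(self, metric, minimize=True):
        """Benchmark runs against each other on a single metric."""
        candidates = [r for r in self.runs() if metric in r["metrics"]]
        if not candidates:
            return None
        chooser = min if minimize else max
        return chooser(candidates, key=lambda r: r["metrics"][metric])
```

For example, two team members logging runs with different learning rates to the same file can then call `best_run("val_loss")` to see which configuration won.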

Model Management

Model management is another critical aspect of MLOps methodology that streamlines model training, packaging, validation, deployment, and monitoring. A clear and consistent model management methodology helps organizations proactively address common business concerns such as regulatory compliance. It also makes models reproducible by tracking the versions of data, code, and models. Finally, packaging and delivering models in repeatable configurations supports reusability, which can accelerate the development of new ML applications.
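A model registry is a common way to implement this tracking. The in-memory sketch below is hypothetical (the `ModelRegistry` class, its stage names, and the version fields are illustrative): each registered version stores a SHA-256 fingerprint of the model artifact together with the data and code versions it was built from, so any production model can be traced back to its inputs.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict

@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_sha256: str  # fingerprint of the serialized model
    data_version: str     # e.g. a dataset snapshot ID (hypothetical)
    code_version: str     # e.g. a Git commit hash (hypothetical)
    metrics: dict = field(default_factory=dict)
    stage: str = "staging"

class ModelRegistry:
    """Hypothetical in-memory registry linking each model version to its data and code."""

    def __init__(self):
        self._versions = {}

    def register(self, name, artifact: bytes, data_version, code_version, metrics=None):
        history = self._versions.setdefault(name, [])
        mv = ModelVersion(
            name=name,
            version=len(history) + 1,
            artifact_sha256=hashlib.sha256(artifact).hexdigest(),
            data_version=data_version,
            code_version=code_version,
            metrics=metrics or {},
        )
        history.append(mv)
        return mv

    def promote(self, name, version):
        """Mark one version as production and archive the previous production version."""
        for mv in self._versions[name]:
            if mv.stage == "production":
                mv.stage = "archived"
        target = self._versions[name][version - 1]
        target.stage = "production"
        return target

    def production(self, name):
        """Return the version currently in production, if any."""
        return next((mv for mv in self._versions.get(name, []) if mv.stage == "production"), None)

    def export(self, name):
        """Serialize the full lineage so it can be audited, e.g. for compliance reviews."""
        return json.dumps([asdict(mv) for mv in self._versions.get(name, [])], indent=2)
```

Keeping the archived versions (rather than deleting them) is what makes a rollback a matter of calling `promote` on an older version number.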

How to implement MLOps

MLOps level 0

In the world of machine learning, there are different levels of maturity that organizations can achieve. Level 0, also known as the nascent stage, is often the starting point for companies that are new to ML. At this stage, companies rely on a completely manual ML workflow, with data scientists driving the entire process. For companies with infrequent model changes or training needs, this level of maturity may suffice. However, as companies scale and their ML needs grow, more advanced levels of maturity are required to ensure success in the long run.

📌 Characteristics (Please check this blog for details)

- Manual operation, script-driven, and interactive process

- Disconnect between ML and operations

- Infrequent release iterations

- No Continuous Integration (CI)

- No Continuous Deployment (CD)

- Deployment refers to the prediction service

- Lack of active performance monitoring

MLOps level 1

At level 1 of MLOps maturity, the focus is on continuous training (CT) of the model, achieved by automating the entire ML pipeline. This in turn enables continuous delivery of the model prediction service, which is especially valuable for solutions operating in constantly changing environments: the pipeline can retrain the model as customer behavior, price rates, and other indicators shift, so companies respond to those changes proactively instead of waiting for a manual update. With the right tools and processes in place, organizations can reach this level of maturity and get considerably more value from their ML initiatives.

📌 Characteristics (Please check this blog for details)

- Rapid experimentation

- CT of the model in production

- Modularized code for components and pipelines

- Continuous delivery of models

- Deployment refers to the prediction service

- Pipeline deployment

- Additional components:

  - Data and model validation

  - Feature Store

  - Metadata management

  - ML pipeline triggers
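Continuous training, data validation, and pipeline triggers can be illustrated together with a drift-based retraining trigger. This is a sketch under simple assumptions: the mean-shift heuristic, the 0.5 threshold, and the function names are hypothetical, and real systems typically use richer statistical tests and also trigger on schedules or on the arrival of new data.

```python
import statistics

def validate_batch(batch, expected_keys):
    """Data validation: every row must have exactly the expected numeric fields."""
    for row in batch:
        if set(row) != set(expected_keys):
            return False
        if not all(isinstance(row[k], (int, float)) for k in expected_keys):
            return False
    return True

def drift_score(reference, current):
    """Mean shift of `current` relative to `reference`, in reference standard deviations."""
    ref_mean, cur_mean = statistics.fmean(reference), statistics.fmean(current)
    ref_std = statistics.stdev(reference)
    if ref_std == 0:
        return 0.0 if cur_mean == ref_mean else float("inf")
    return abs(cur_mean - ref_mean) / ref_std

def should_retrain(reference, batch, feature, expected_keys, threshold=0.5):
    """Pipeline trigger: retrain when a valid batch drifts beyond the threshold."""
    if not validate_batch(batch, expected_keys):
        raise ValueError("batch failed data validation; refusing to train on it")
    current = [row[feature] for row in batch]
    return drift_score(reference, current) > threshold
```

Here `reference` would be the feature's values in the training data and `batch` a window of production inputs; a `True` result is the signal that starts the automated training pipeline.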

MLOps level 2

At level 2, the ML pipeline itself is built, tested, and deployed through a robust, automated CI/CD system. This level of maturity is particularly important for tech-driven companies that rely heavily on ML to power their operations; without an end-to-end MLOps cycle, such organizations will struggle to keep up in today's highly competitive business landscape. An automated CI/CD system lets these companies experiment quickly, continually improve their ML initiatives, and ship updated pipelines and models reliably.

📌 Characteristics (Please check this blog for details)

- Development and experimentation

- Pipeline continuous integration

- Pipeline continuous delivery

- Automated triggering

- Model continuous delivery

- Monitoring
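For model continuous delivery, the last step of the pipeline usually decides automatically whether a newly trained model replaces the one in production. A minimal sketch of such a gate, assuming accuracy as the primary metric and a latency budget; the `Candidate` class, metric names, and thresholds are illustrative, not taken from any particular CI/CD product.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    version: str
    metrics: dict  # e.g. {"accuracy": 0.91, "latency_ms": 40}

def promotion_decision(candidate, production, primary="accuracy",
                       min_gain=0.0, max_latency_ms=100.0):
    """Hypothetical CD gate: deploy the candidate only if it beats production
    on the primary metric and stays within the latency budget."""
    # A model without a latency measurement is treated as too slow.
    if candidate.metrics.get("latency_ms", float("inf")) > max_latency_ms:
        return "reject: latency budget exceeded"
    # With nothing in production yet, any model that meets the budget ships.
    if production is None:
        return "deploy"
    gain = candidate.metrics[primary] - production.metrics[primary]
    return "deploy" if gain > min_gain else "keep production"
```

Returning a decision string rather than deploying directly keeps the gate easy to test in the pipeline's own CI.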

Some MLOps Best Practices from neptune.ai

Team

- Use a collaborative development platform

- Work against a shared backlog

- Communicate, align, and collaborate with others

Data

- Use sanity checks for all external data sources

- Track, identify, and account for changes in data sources

- Write reusable scripts for data cleaning and merging

- Combine and modify existing features to create new features in human-understandable ways

- Make data sets available on shared infrastructure (private or public)
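Sanity checks on external data can be as simple as validating each incoming batch against an expected schema before it enters the pipeline. A sketch assuming records arrive as Python dicts; the column names, value ranges, and 5% missing-value tolerance are hypothetical.

```python
import math

# Hypothetical expected schema for an external source: column -> (type, min, max)
SCHEMA = {
    "age": (int, 0, 120),
    "income": (float, 0.0, math.inf),
}

def sanity_check(rows, schema=SCHEMA, max_null_frac=0.05):
    """Return a list of human-readable problems; an empty list means the batch passed."""
    if not rows:
        return ["empty batch"]
    problems = []
    for col, (typ, lo, hi) in schema.items():
        values = [r.get(col) for r in rows]
        nulls = sum(v is None for v in values)
        if nulls / len(rows) > max_null_frac:
            problems.append(f"{col}: {nulls}/{len(rows)} values missing")
        for i, v in enumerate(values):
            if v is None:
                continue
            if not isinstance(v, typ):
                problems.append(f"{col}[{i}]: expected {typ.__name__}, got {type(v).__name__}")
            elif not lo <= v <= hi:
                problems.append(f"{col}[{i}]: {v} outside [{lo}, {hi}]")
    # Columns the source was not expected to send often signal an upstream change.
    unexpected = set().union(*rows) - set(schema)
    if unexpected:
        problems.append(f"unexpected columns: {sorted(unexpected)}")
    return problems
```

Returning a list of problems instead of raising on the first one makes it easier to report every issue with a data source at once.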

Objective (Metrics & KPIs)

- Choose a simple, observable, and attributable metric for your first objective

- Set governance objectives

- Enforce fairness and privacy

Model

- Keep the first model simple and get the infrastructure right

- Starting with an interpretable model makes debugging easier

- Training:

  - Capture the training objective in a metric that is easy to measure and understand

  - Actively remove features that are not used

  - Peer review training scripts

  - Enable parallel training experiments

  - Automate hyper-parameter optimisation

  - Continuously measure model quality and performance

  - Use versioning for data, model, configurations, and training scripts
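Automated hyper-parameter optimisation can start with plain random search. In the sketch below, `train_and_score` is a hypothetical stand-in for a real training run (a synthetic loss surface with its minimum near a learning rate of 0.01 and a depth of 6), so the example runs instantly; in practice each trial would be a full training job, ideally run in parallel and logged to an experiment tracker.

```python
import math
import random

def train_and_score(params, seed=0):
    """Hypothetical stand-in for a training run: returns a noisy validation loss
    that is smallest near learning_rate=0.01 and depth=6."""
    rng = random.Random(seed)
    noise = rng.gauss(0, 0.01)
    lr_term = (math.log10(params["learning_rate"]) + 2) ** 2
    depth_term = 0.05 * (params["depth"] - 6) ** 2
    return lr_term + depth_term + noise

def random_search(space, n_trials=50, seed=42):
    """Random search: sample configurations and keep the best-scoring one."""
    rng = random.Random(seed)
    best_params, best_loss = None, math.inf
    for trial in range(n_trials):
        params = {name: sample(rng) for name, sample in space.items()}
        loss = train_and_score(params, seed=trial)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss

SEARCH_SPACE = {
    # Sample the learning rate log-uniformly between 1e-4 and 1e-1.
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, -1),
    "depth": lambda rng: rng.randint(2, 10),
}
```

Random search is used here only because it is the simplest automated strategy; Bayesian optimisation and early-stopping schemes are common alternatives that need fewer trials.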

Code

- Run automated regression tests

- Use static analysis to check code quality

- Use continuous integration
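Automated regression tests for ML code often pin a model's outputs on a few "golden" inputs, so that an unintended change in preprocessing or weights fails CI. A minimal sketch with the standard `unittest` module; the `predict` function, its weights, and the golden cases are hypothetical.

```python
import unittest

def predict(features):
    """Hypothetical model under test: a fixed linear scoring function."""
    weights = {"tenure": -0.02, "support_calls": 0.3}
    return sum(weights[k] * features[k] for k in weights)

# Golden cases recorded from a previously approved model version (illustrative values).
GOLDEN = [
    ({"tenure": 10, "support_calls": 0}, -0.2),
    ({"tenure": 0, "support_calls": 2}, 0.6),
]

class PredictionRegressionTest(unittest.TestCase):
    def test_matches_golden_outputs(self):
        for features, expected in GOLDEN:
            with self.subTest(features=features):
                self.assertAlmostEqual(predict(features), expected, places=6)

    def test_rejects_missing_feature(self):
        # A silently ignored missing feature would be a regression too.
        with self.assertRaises(KeyError):
            predict({"tenure": 3})
```

In a CI job this file would typically be run with `python -m unittest`, alongside a static-analysis step.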

Deployment

- Plan to launch and iterate

- Automate model deployment

- Continuously monitor the behaviour of deployed models

- Enable automatic rollbacks for production models

- When performance plateaus, look for qualitatively new sources of information to add rather than refining existing signals.

- Enable shadow deployment

- Keep ensembles simple

- Log production predictions with the model’s version, code version, and input data
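Shadow deployment and logging predictions with version metadata fit naturally in one serving function. A sketch assuming both models are plain Python callables and that logs are emitted as JSON lines through the standard `logging` module; the model functions and version strings are hypothetical.

```python
import json
import logging
import time

logger = logging.getLogger("predictions")

# Hypothetical version identifiers; in practice these come from the model registry and Git.
PRIMARY = {"model_version": "churn-v7", "code_version": "a1b2c3d"}
SHADOW = {"model_version": "churn-v8", "code_version": "e4f5a6b"}

def primary_model(x):
    return 0.3 * x["support_calls"] - 0.02 * x["tenure"]

def shadow_model(x):
    return 0.25 * x["support_calls"] - 0.015 * x["tenure"]

def serve(features):
    """Return the primary prediction; run the shadow model on the same input
    and log both, but never let the shadow affect the response."""
    prediction = primary_model(features)
    try:
        shadow_prediction = shadow_model(features)
    except Exception:  # a failing shadow model must not break serving
        shadow_prediction = None
    record = {
        "ts": time.time(),
        "input": features,
        "prediction": prediction,
        **PRIMARY,
        "shadow": {"prediction": shadow_prediction, **SHADOW},
    }
    logger.info(json.dumps(record))
    return prediction
```

The `predictions` logger must be configured to emit INFO records (for example with a handler writing to a log file) for these lines to be stored; comparing the logged primary and shadow predictions offline is what tells you whether the shadow model is ready to be promoted.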